
Domain Adaptation Project


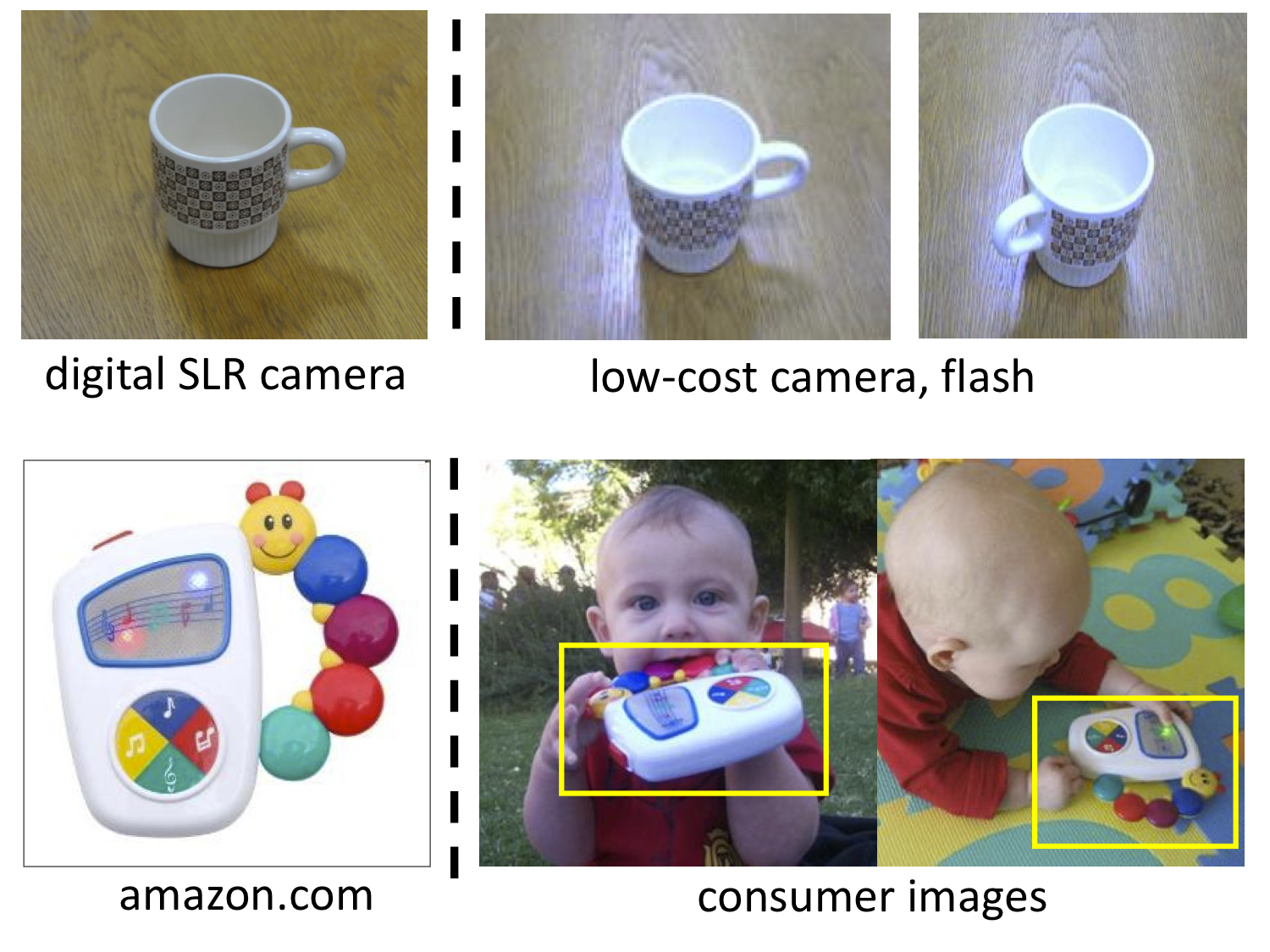

While the vast majority of object recognition methods today are trained and evaluated on the same image distribution, real-world applications often present changing visual domains. In general, visual domains could differ in some combination of (often unknown) factors, including scene, intra-category variation, object location and pose, view angle, resolution, motion blur, scene illumination, background clutter, camera characteristics, etc. Recent studies have demonstrated a significant degradation in the performance of state-of-the-art image classifiers due to domain shift from pose changes (Farhadi and Tabrizi, 2008), a shift from commercial to consumer video (Duan et al., 2009, 2010), and, more generally, training datasets biased by the way in which they were collected (Torralba and Efros, 2011).

To address this problem, we have been developing domain adaptation algorithms to transfer knowledge from visual recognition systems trained on some available labeled data to the real world of natural images. For example, we may have labeled training data like the images on the left and need to classify real-world images like those on the right.


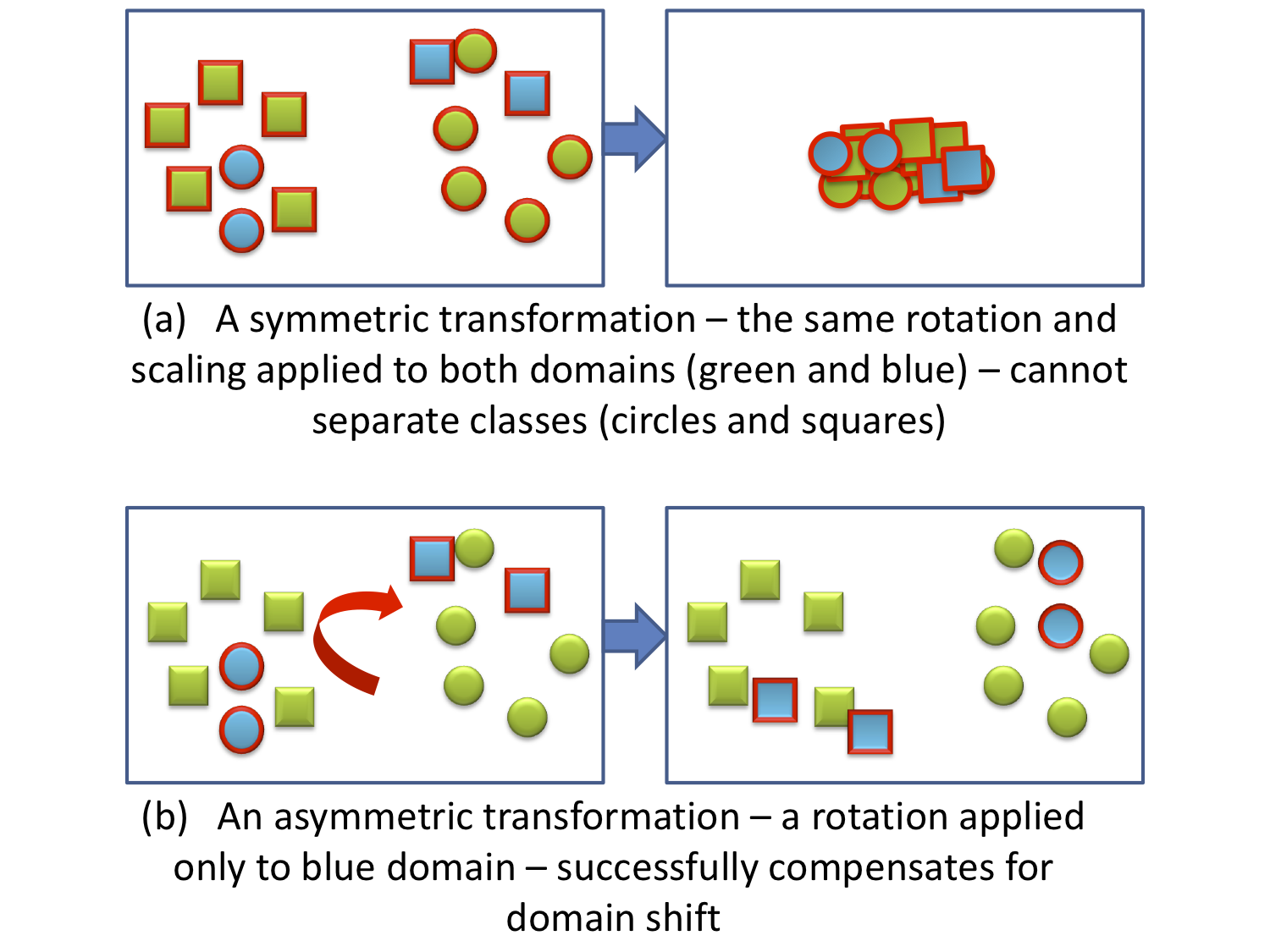

Domain transformations offer a flexible model for both supervised and unsupervised learning that allows us to transfer category-independent information between domains. We first showed how to extend metric learning methods to domain adaptation [1], which allows learning metrics independent of the domain shift and of the final classifier used. Recognizing that not all domain shifts can be captured by a symmetric positive definite transform, we then extended this approach to learn a representation via an asymmetric, category-independent transform [2]. Our framework can adapt features even when the target domain has no labeled examples for some categories, and when the target and source features have different dimensions. Data and code are available.
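As a minimal sketch of how an asymmetric transform can relate domains whose features have different dimensions, the snippet below scores each source feature x against each target feature y with a bilinear form x^T W y. The matrix `W` here is random and only stands in for a transform that would be learned as in [2]; the function name and the dimensions are illustrative assumptions, not the API of the released code.

```python
import numpy as np

def cross_domain_similarity(X_src, Y_tgt, W):
    """Bilinear similarity x^T W y for every source/target pair.

    X_src: (n_src, d_src), Y_tgt: (n_tgt, d_tgt), W: (d_src, d_tgt).
    Returns an (n_src, n_tgt) matrix of similarities.
    """
    return X_src @ W @ Y_tgt.T

rng = np.random.default_rng(0)
X_src = rng.normal(size=(5, 800))   # e.g. 800-dimensional source features
Y_tgt = rng.normal(size=(3, 600))   # e.g. 600-dimensional target features
W = rng.normal(size=(800, 600))     # placeholder, NOT a learned transform

S = cross_domain_similarity(X_src, Y_tgt, W)
print(S.shape)  # (5, 3)
```

Because W is rectangular, no requirement is placed on the two feature spaces sharing a dimension, which is the property the paragraph above relies on.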


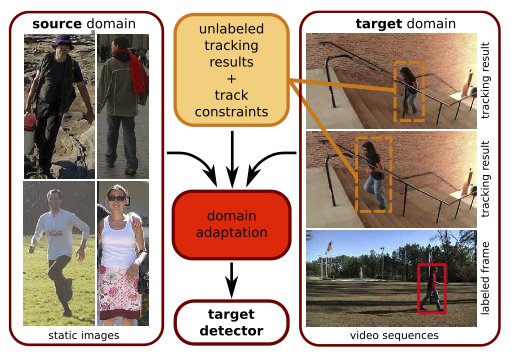

We also developed an extended method that can incorporate within-domain instance constraints to learn more accurate transformations with little or no labeled target training data [4]. For example, tracking constraints could be incorporated into the learning framework so that images from the same track are eventually classified similarly.


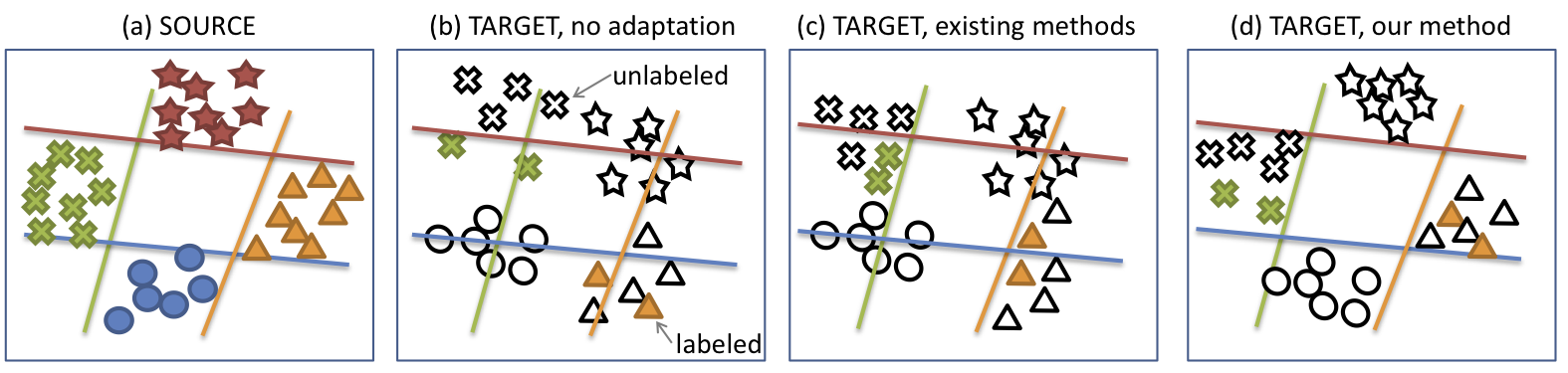

Max-margin Domain Transformations (MMDT) [5] is an algorithm that combines the representational power of our feature transformation methods with the robustness of a max-margin classifier. MMDT uses a transform W to map target features x to a new representation Wx that is maximally aligned with the source, learning the transform jointly on all categories for which target labels are available (see figure below).


MMDT provides a way to adapt max-margin classifiers in a multi-class manner by learning a shared component of the domain shift, as captured by the feature transformation W. Additionally, MMDT can be optimized quickly in linear space, making it a feasible solution for problem settings with a large amount of training data.
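To make the idea of learning W jointly with a max-margin classifier more concrete, here is a rough sketch of what such a joint objective could look like for a single binary classifier. The exact formulation is given in [5]; the form below (Frobenius-norm regularizer on W, hinge loss on source points and on transformed target points, trade-off weights `C_s` and `C_t`) is an assumption made for illustration, and all names are hypothetical.

```python
import numpy as np

def hinge(margins):
    """Standard hinge loss max(0, 1 - margin), applied elementwise."""
    return np.maximum(0.0, 1.0 - margins)

def mmdt_style_objective(W, theta, b, Xs, ys, Xt, yt, C_s=1.0, C_t=1.0):
    """Assumed joint objective for one binary problem, labels in {-1, +1}.

    Xs: (n_s, d_s) source features, Xt: (n_t, d_t) target features,
    W: (d_s, d_t) maps a target feature x to W x in the source space,
    theta: (d_s,) hyperplane normal, b: scalar bias.
    """
    src_margins = ys * (Xs @ theta + b)
    tgt_margins = yt * ((Xt @ W.T) @ theta + b)   # classify W x with the same hyperplane
    reg = 0.5 * np.sum(W ** 2) + 0.5 * (theta @ theta)
    return reg + C_s * hinge(src_margins).sum() + C_t * hinge(tgt_margins).sum()

rng = np.random.default_rng(0)
Xs, ys = rng.normal(size=(4, 5)), np.array([1, -1, 1, -1])
Xt, yt = rng.normal(size=(2, 3)), np.array([1, -1])
W0, theta0 = np.zeros((5, 3)), np.zeros(5)

value = mmdt_style_objective(W0, theta0, 0.0, Xs, ys, Xt, yt)
print(value)  # 6.0: every margin is 0, so each of the 6 examples costs 1
```

In a multi-class setting one would presumably sum such terms over per-category classifiers that all share the same W, which is how a single transform can capture a shared component of the domain shift.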


Recent domain adaptation methods successfully learn cross-domain transforms to map points between source and target domains. Yet these methods are either restricted to a single training domain or assume that the separation into source domains is known a priori. However, most available training data contains multiple unknown domains. We learn to separate a dataset that contains multiple unknown domains into several latent domains.


Our discovery method is based on a novel hierarchical clustering technique that uses available object category information to constrain the set of feasible domain separations. An example domain separation learned using our algorithm is shown above. Data and Code available.
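The following is a simplified sketch of one way category labels can constrain a domain separation; it is not the algorithm from [3]. It assumes a two-level scheme: first cluster the points of each category into local clusters, then group the local clusters into domains so that every domain receives exactly one local cluster from each category. The helper names, the use of plain k-means, and the brute-force matching over permutations are all assumptions chosen to keep the example short.

```python
import itertools
import numpy as np

def kmeans(X, k, rng, n_iter=20):
    """Plain Lloyd-style k-means; returns (centers, labels)."""
    centers = X[rng.choice(len(X), size=k, replace=False)].astype(float)
    labels = np.zeros(len(X), dtype=int)
    for _ in range(n_iter):
        d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        for j in range(k):
            if np.any(labels == j):      # keep the old center if a cluster empties
                centers[j] = X[labels == j].mean(axis=0)
    return centers, labels

def discover_domains(X, y, n_domains, seed=0, n_iter=10):
    """Assign each point a latent domain index in [0, n_domains)."""
    rng = np.random.default_rng(seed)
    cats = np.unique(y)
    # Level 1: local clusters inside each category.
    local_means, local_labels = {}, np.empty(len(X), dtype=int)
    for c in cats:
        idx = np.flatnonzero(y == c)
        local_means[c], local_labels[idx] = kmeans(X[idx], n_domains, rng)
    # Level 2: match local clusters to domains, one per category per domain.
    perms = list(itertools.permutations(range(n_domains)))
    domain_centers = local_means[cats[0]].copy()
    for _ in range(n_iter):
        assign = {}
        for c in cats:
            # p[d] is the local cluster of category c placed in domain d.
            costs = [((local_means[c][list(p)] - domain_centers) ** 2).sum()
                     for p in perms]
            assign[c] = perms[int(np.argmin(costs))]
        domain_centers = np.mean(
            [local_means[c][list(assign[c])] for c in cats], axis=0)
    domains = np.empty(len(X), dtype=int)
    for c in cats:
        idx = np.flatnonzero(y == c)
        local_to_domain = np.argsort(assign[c])   # inverse permutation
        domains[idx] = local_to_domain[local_labels[idx]]
    return domains

# Synthetic data: 2 hidden domains x 2 categories, 20 points in each cell.
rng = np.random.default_rng(0)
true_domain = np.repeat([0, 1], 40)
y = np.tile(np.repeat([0, 1], 20), 2)
X = rng.normal(scale=0.1, size=(80, 2))
X[:, 0] += 100.0 * true_domain   # large domain shift along axis 0
X[:, 1] += 5.0 * y               # smaller category shift along axis 1

found = discover_domains(X, y, n_domains=2)
agreement = np.mean(found == true_domain)   # 1.0 or 0.0 up to label swap
```

The category constraint is what prevents the second level from simply grouping points by object class: a domain is only allowed to collect one cluster per category, so the grouping has to follow whatever variation is shared across categories.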


Adaptation Datasets

- Office Dataset: Contains 3 domains: Amazon, Webcam, and Dslr. These contain, respectively, images from amazon.com and office-environment images taken under varying lighting and pose with a webcam or a DSLR camera. Each domain contains 31 categories. Features are SURF BoW histograms, vector quantized to 800 dimensions (unless indicated as 600).

- Office-Caltech Dataset: Contains the 10 categories that overlap between the Office dataset and the Caltech256 dataset. SURF BoW histogram features, vector quantized to 800 dimensions, are also available for this dataset.

- Bing-Caltech Dataset: Contains all 256 categories from Caltech256, augmented with 300 web images per category collected through textual search using Bing. The available features are the classeme features computed by the original proposers of the dataset.
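For readers unfamiliar with bag-of-words (BoW) features, the sketch below shows the general idea of vector quantizing local descriptors against an 800-word codebook to obtain an 800-dimensional histogram. It is an illustration only: the codebook and descriptors are random stand-ins, the 64-dimensional descriptor size and the L1 normalization are assumptions, and the function is not part of the released feature extraction code.

```python
import numpy as np

def bow_histogram(descriptors, codebook):
    """Assign each local descriptor to its nearest codeword and count.

    descriptors: (n, d) local descriptors from one image.
    codebook:    (k, d) visual words, e.g. k = 800.
    Returns a length-k histogram normalized to sum to 1 (an assumption).
    """
    # Squared Euclidean distances via |a|^2 - 2 a.b + |b|^2.
    d2 = ((descriptors ** 2).sum(axis=1)[:, None]
          - 2.0 * descriptors @ codebook.T
          + (codebook ** 2).sum(axis=1)[None, :])
    nearest = d2.argmin(axis=1)
    hist = np.bincount(nearest, minlength=len(codebook)).astype(float)
    return hist / max(hist.sum(), 1.0)

rng = np.random.default_rng(0)
codebook = rng.normal(size=(800, 64))      # stand-in for a learned codebook
descriptors = rng.normal(size=(200, 64))   # stand-in for one image's descriptors

hist = bow_histogram(descriptors, codebook)
print(hist.shape)  # (800,)
```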

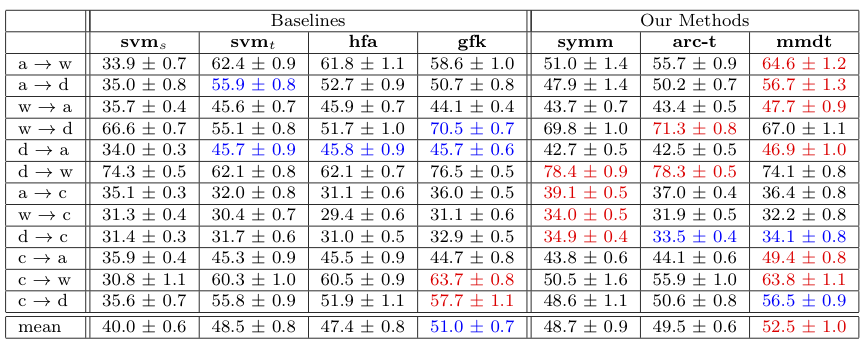

Standard Semi-supervised Domain Adaptation Experiments

- Office-Caltech Dataset uses 20 source examples per category if source is Amazon, otherwise 8 examples per source category. Uses 3 labeled examples per target category. Results in the table below are averaged across 20 train/test splits available under the dataset download section. Note: following Gong et al. (2012) we use zscore to normalize our features.

- Baselines: gfk, Gong et al. (2012); hfa, Duan et al. (2012).

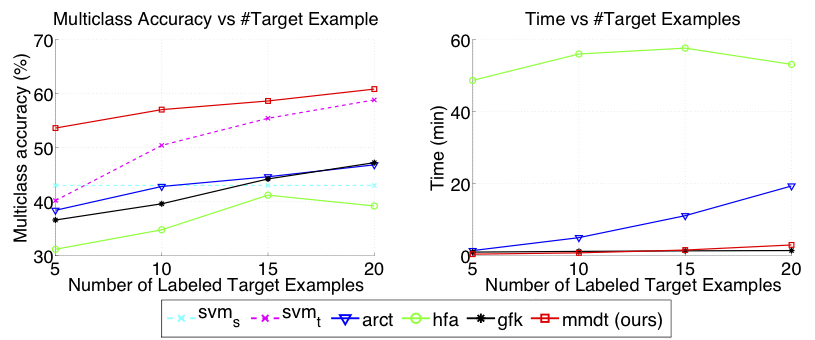

- Bing-Caltech Dataset uses only the first 30 categories available. Uses 50 labeled source examples per category and varies the number of labeled target categories (indicated on x-axis). Train/test splits are available from the authors of this dataset (links below).
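The Office-Caltech protocol above (z-score normalization, then a fixed number of labeled examples per category) can be sketched roughly as follows. This is not the script that produced the released splits; the data are synthetic, the function names are hypothetical, and normalizing each feature dimension over one whole domain is an assumption, since the text does not say over which set the statistics are computed.

```python
import numpy as np

def zscore(X, eps=1e-12):
    """Standardize each feature dimension to zero mean and unit variance.

    ddof=1 gives the sample standard deviation, as MATLAB's zscore does;
    that the original experiments used that function is an assumption.
    """
    mu = X.mean(axis=0)
    sigma = X.std(axis=0, ddof=1)
    return (X - mu) / np.maximum(sigma, eps)

def sample_per_category(y, n_per_cat, rng):
    """Pick n_per_cat random example indices from every category in y."""
    picked = [rng.choice(np.flatnonzero(y == c), size=n_per_cat, replace=False)
              for c in np.unique(y)]
    return np.concatenate(picked)

rng = np.random.default_rng(0)
y_src = np.repeat(np.arange(10), 50)   # 10 categories, 50 synthetic examples each
y_tgt = np.repeat(np.arange(10), 15)
X_src = zscore(rng.normal(loc=3.0, scale=2.0, size=(len(y_src), 800)))

src_train = sample_per_category(y_src, 20, rng)   # 20 per category (Amazon as source)
tgt_train = sample_per_category(y_tgt, 3, rng)    # 3 labeled target examples per category
print(len(src_train), len(tgt_train))  # 200 30
```

Repeating the sampling with 20 different seeds would give 20 random splits in the same spirit as the ones described above, although the released splits should be used to reproduce the reported numbers.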


- Transformation Learning code [1,2]

  - For the demo script, download the Office dataset.

- Domain Discovery and Multisource Domain Transforms code [3]

  - Dependencies: Transformation Learning code, Libsvm

  - For the demo script, download the Bing-Caltech dataset.

- Max Margin Domain Transforms code [5]

  - Dependencies: Transformation Learning code, Liblinear-weights

  - For the demo script, download the Office-Caltech dataset and the 20 Train/Test splits.

- Office Dataset: Images / surf features / surf features with objIds / decaf features

- Office-Caltech Dataset and GFK code.

- 20 Train/Test splits used for standard domain adaptation experiment

- Bing-Caltech Dataset


[1] K. Saenko, B. Kulis, M. Fritz and T. Darrell, "Adapting Visual Category Models to New Domains" In Proc. ECCV, September 2010, Heraklion, Greece.

[2] B. Kulis, K. Saenko, and T. Darrell, "What You Saw is Not What You Get: Domain Adaptation Using Asymmetric Kernel Transforms" In Proc. IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2011.

[3] J. Hoffman, B. Kulis, T. Darrell, and K. Saenko, "Discovering Latent Domains For Multisource Domain Adaptation" In Proc. ECCV, October 2012, Florence, Italy.

[4] J. Donahue, J. Hoffman, E. Rodner, K. Saenko, and T. Darrell, "Semi-Supervised Domain Adaptation with Instance Constraints" In Proc. CVPR, June 2013, Oregon, USA.

[5] J. Hoffman, E. Rodner, J. Donahue, K. Saenko, and T. Darrell, "Efficient Learning of Domain Invariant Image Representations" In Proc. ICLR, May 2013, Arizona, USA.


Team Members

Judy Hoffman

Erik Rodner

Jeff Donahue

Kate Saenko

Trevor Darrell

Brian Kulis