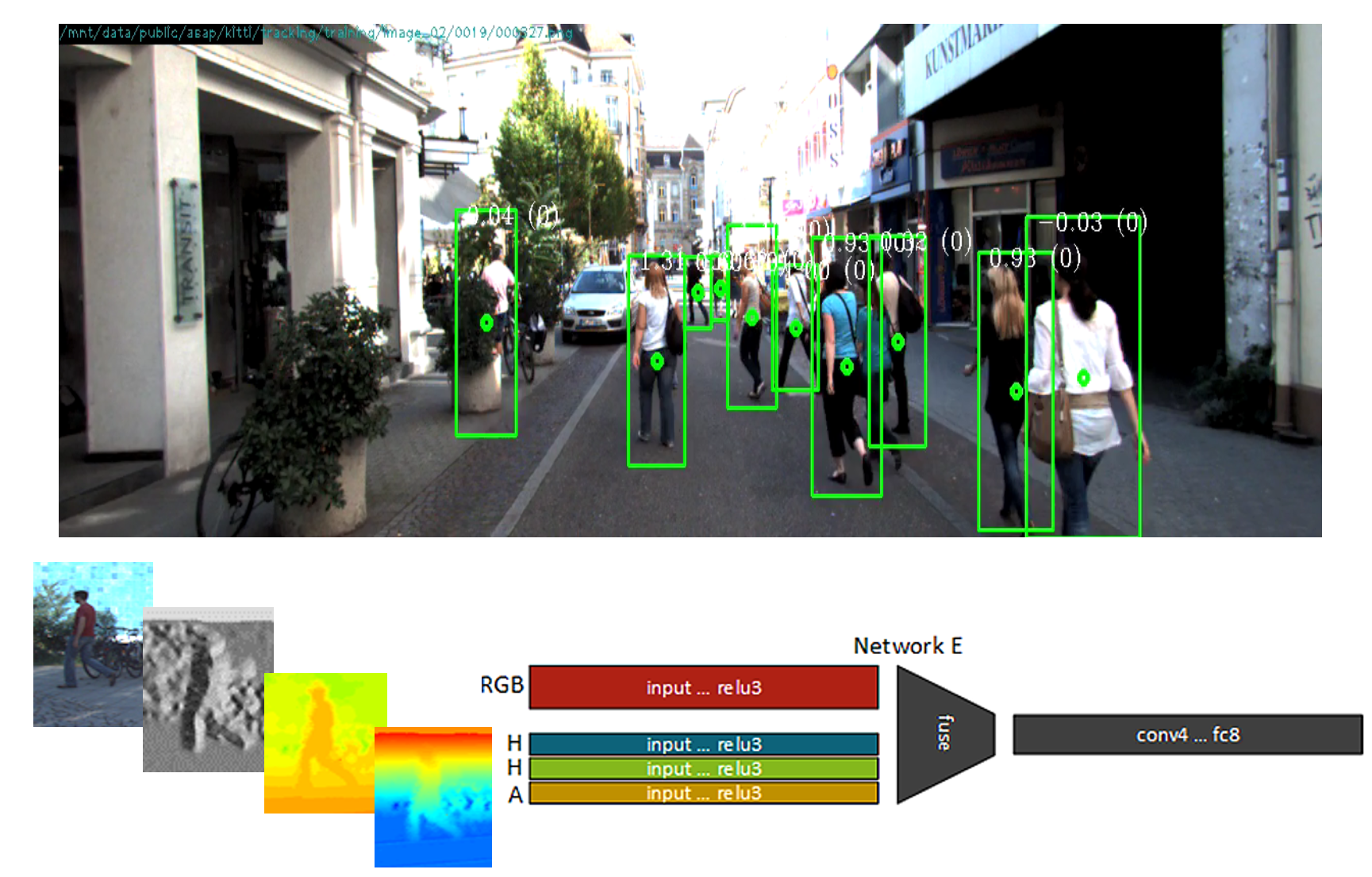

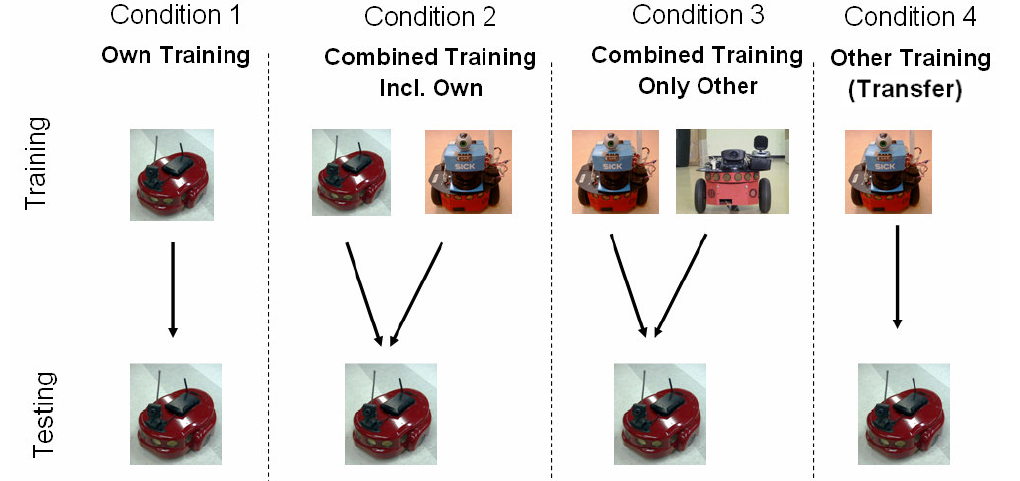

Knowledge transfer across heterogeneous robots: In my thesis I showed that mid-level representations are useful for learning object models (in the form of Gaussian Mixture Models) and for transferring them across heterogeneous robots with differing sensors. Feature learning has, of course, come a long way since then (starting with sparse coding), and we have extended these methods. These principles have proven relevant in the age of deep learning, where hierarchies of learned features have been shown to be highly transferable, albeit with some fine-tuning on labeled examples. Our clustering work for cross-task learning (see above) extends this line of work to the setting where no labeled data is available, and we plan to apply it to heterogeneous robot teams in future work.
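As a toy illustration of the general idea only (not the method or representation used in the thesis), the Python sketch below assumes a hypothetical mid-level feature, (r, g) chromaticity, that two robots with differently formatted cameras can both compute. Robot A fits one Gaussian Mixture Model per object (diagonal covariance, plain EM) in that shared space, and robot B reuses those models unchanged on its own readings. The robot sensor formats, object labels, function names and synthetic data are all made up for illustration.

```python
import math
import random

# Toy sketch (assumption): NOT the thesis's method. The mid-level feature is a
# hypothetical (r, g) chromaticity, which ignores the camera's intensity scale.
def chromaticity(r, g, b):
    s = r + g + b
    return (r / s, g / s)

def robot_a_features(reading):
    # Hypothetical robot A: camera reports (R, G, B) in 0..255.
    r, g, b = reading
    return chromaticity(r, g, b)

def robot_b_features(reading):
    # Hypothetical robot B: camera reports (B, G, R) in 0..1.
    b, g, r = reading
    return chromaticity(r, g, b)

def log_gauss_diag(x, mean, var):
    # Log density of a Gaussian with diagonal covariance.
    return sum(-0.5 * (math.log(2 * math.pi * v) + (xi - m) ** 2 / v)
               for xi, m, v in zip(x, mean, var))

def log_sum_exp(vals):
    m = max(vals)
    return m + math.log(sum(math.exp(v - m) for v in vals))

def fit_gmm(points, k=2, iters=50, seed=0, min_var=1e-4):
    """Fit a diagonal-covariance Gaussian mixture with plain EM."""
    rng = random.Random(seed)
    n, d = len(points), len(points[0])
    means = [list(p) for p in rng.sample(points, k)]
    mu = [sum(p[j] for p in points) / n for j in range(d)]
    var0 = [max(sum((p[j] - mu[j]) ** 2 for p in points) / n, min_var)
            for j in range(d)]
    variances = [list(var0) for _ in range(k)]
    weights = [1.0 / k] * k
    for _ in range(iters):
        # E-step: posterior responsibility of each component for each point.
        resp = []
        for p in points:
            logs = [math.log(weights[c]) + log_gauss_diag(p, means[c], variances[c])
                    for c in range(k)]
            z = log_sum_exp(logs)
            resp.append([math.exp(lg - z) for lg in logs])
        # M-step: re-estimate weights, means and (floored) variances.
        for c in range(k):
            nc = max(sum(r[c] for r in resp), 1e-12)
            weights[c] = nc / n
            means[c] = [sum(r[c] * p[j] for r, p in zip(resp, points)) / nc
                        for j in range(d)]
            variances[c] = [max(sum(r[c] * (p[j] - means[c][j]) ** 2
                                    for r, p in zip(resp, points)) / nc, min_var)
                            for j in range(d)]
    return weights, means, variances

def gmm_log_likelihood(gmm, x):
    weights, means, variances = gmm
    return log_sum_exp([math.log(w) + log_gauss_diag(x, m, v)
                        for w, m, v in zip(weights, means, variances)])

def classify(models, x):
    # Pick the object whose mixture assigns x the highest likelihood.
    return max(models, key=lambda label: gmm_log_likelihood(models[label], x))

def train_robot_a_models(seed=42):
    # Synthetic robot-A training data: noisy RGB readings of two objects.
    rng = random.Random(seed)

    def sample(base, n=40):
        return [tuple(max(1.0, c + rng.gauss(0, 8)) for c in base) for _ in range(n)]

    training = {"red_ball": sample((200, 40, 30)), "green_box": sample((40, 180, 50))}
    return {label: fit_gmm([robot_a_features(x) for x in readings])
            for label, readings in training.items()}

if __name__ == "__main__":
    models = train_robot_a_models()
    # Robot B sees a red object in its own (B, G, R), 0..1 format.
    print(classify(models, robot_b_features((30 / 255, 45 / 255, 190 / 255))))  # red_ball
```

Because both robots map into the same scale-free space, robot B needs no retraining here; in practice the hard part is learning or aligning such shared representations, which this sketch simply assumes away.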

- Kira, Z., "Inter-Robot Transfer Learning for Perceptual Classification", in Proceedings of the 9th International Conference on Autonomous Agents and Multiagent Systems (AAMAS), 2010.

- Kira, Z., "Communication and Alignment of Grounded Symbolic Knowledge Among Heterogeneous Robots", Ph.D. Dissertation, College of Computing, Georgia Institute of Technology, May 2010.