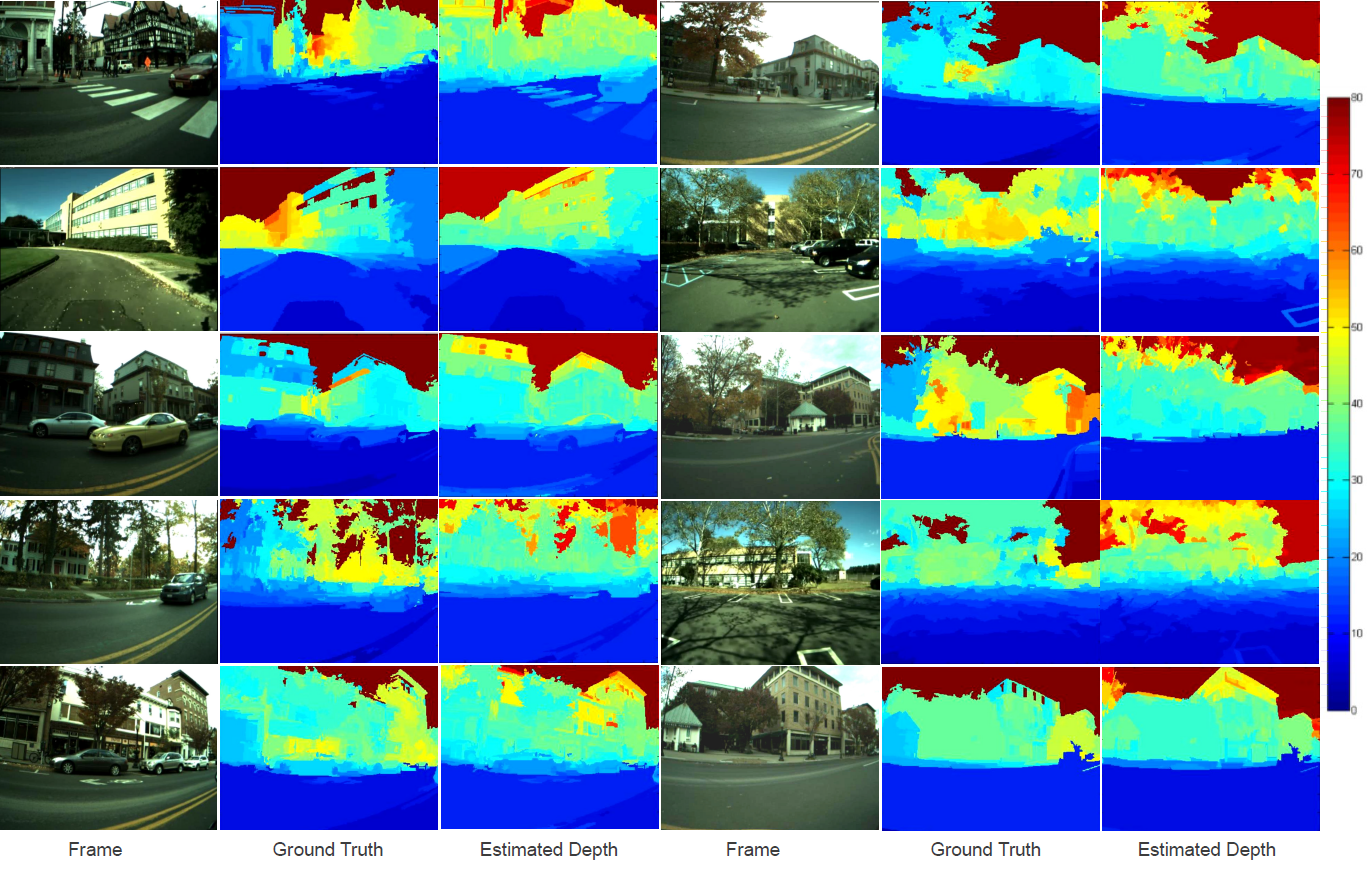

Examples of video scenes, ground truth, and depth predicted by our method. The legend shows the depth range from 0 m (blue) to 80 m (red). |

| Authors |

|

S. Hussain Raza |

| Abstract |

| We present an algorithm to estimate depth in dynamic video scenes. We propose to learn and infer depth in videos from appearance, motion, occlusion boundaries, and geometric context of the scene. Using our method, depth can be estimated from unconstrained videos with no requirement of camera pose estimation, and in the presence of significant background and foreground motion. We start by decomposing a video into spatio-temporal regions. For each spatio-temporal region, we learn the relationship of depth to visual appearance, motion, and geometric classes. We then infer the depth of new scenes using a piecewise planar parametrization estimated within a Markov random field (MRF) framework, combining learned appearance-to-depth mappings with smoothness constraints guided by occlusion boundaries. Subsequently, we perform temporal smoothing to obtain temporally consistent depth maps. To evaluate our depth estimation algorithm, we provide a novel dataset with ground-truth depth for outdoor video scenes. We present a thorough evaluation of our algorithm on our new dataset and on the publicly available Make3D static image dataset. |
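
The temporal smoothing step mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not say which smoothing scheme the paper uses, so the exponential moving average below is an assumption chosen for simplicity, not the authors' method. The function name `smooth_depth_sequence`, the parameter `alpha`, and the sample depths are all hypothetical.

```python
def smooth_depth_sequence(depths, alpha=0.5):
    """Exponentially smooth one region's per-frame depth estimates (metres).

    Illustrative assumption only: the paper's actual temporal smoothing
    scheme is not described in the abstract.
    alpha = 1.0 keeps the raw estimates; smaller values smooth more.
    """
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    smoothed = []
    for d in depths:
        if not smoothed:
            # The first frame has no history, so keep it unchanged.
            smoothed.append(float(d))
        else:
            # Blend the new estimate with the previous smoothed value.
            smoothed.append(alpha * float(d) + (1.0 - alpha) * smoothed[-1])
    return smoothed


# Hypothetical flickering depth estimates for one spatio-temporal region.
per_frame = [10.0, 14.0, 10.0, 14.0]
print(smooth_depth_sequence(per_frame, alpha=0.5))  # [10.0, 12.0, 11.0, 12.5]
```

In this toy sequence the frame-to-frame jump shrinks from 4 m to at most 2 m, which is the kind of flicker reduction that temporally consistent depth maps call for.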

| Paper |

| Video |

| Citation |

@article{VideoDepth2014,

author = {S. Hussain Raza and Omar Javed and Aveek Das and Harpreet Sawhney and Hui Cheng and Irfan Essa},

title = {Depth Extraction from Videos Using Geometric Context and Occlusion Boundaries},

journal = {BMVC},

year = {2014},

} |

| Dataset |

| You can download the original videos and ground truth: videoDepth_dataset. For questions, please contact the authors by e-mail. |

| Funding |

This research is supported by:

|

| Copyright |

The documents contained in these directories are included by the contributing authors as a means to ensure timely dissemination of scholarly and technical work on a non-commercial basis. Copyright and all rights therein are maintained by the authors or by other copyright holders, notwithstanding that they have offered their works here electronically. It is understood that all persons copying this information will adhere to the terms and constraints invoked by each author's copyright. These works may not be reposted without explicit permission of the copyright holder. |

|