
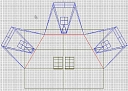

Fire Department Training: The fire command training simulation lets firefighters practice fighting a fire in a single-story dwelling. The user can direct firefighters to different parts of the house to fight the fire as effectively as possible.

Situational Visualization: Situational Visualization integrates geospatial data from multiple sources and draws on techniques from visualization, augmented reality, virtual reality, and ubiquitous computing to give users the tools they need to understand and act on this information. In effect, it is a mobile, context-aware visualization of the user's surroundings.

Real-time 3D Weather: A system is being developed to permit visual exploration of dynamic 3D weather as it evolves. Weather data are acquired in real time from 3D Doppler radars and other observations, then displayed along with accurate terrain, building, and street models. Multiscale weather simulations have also been integrated to permit accurate comparison and prediction. Ultimately, the system will provide comprehensive, highly detailed weather visualization and analysis across the entire U.S.

VGIS: VGIS (Virtual Geographic Information System) is a large, multifaceted project that supports navigation of and interaction with very large, high-resolution, dynamically changing databases while retaining real-time display and interaction.

The system lets users navigate accurate geographies (better than 1-meter resolution in some cases) at sustained frame rates of 15 to 20 frames per second. From any viewing angle, users can see not only the terrain but also buildings, roads, high-resolution imagery draped on the terrain, and other features.

Blindness Simulation: Family members of people with certain types of partial blindness sometimes don't understand why their loved ones, who can still partially see, find it difficult to function. We have simulated various blindness conditions in a simple virtual city environment containing obstacles. The user wears a head-mounted display and moves around the cluttered space, trying to avoid the obstacles. We simulate tunnel vision, loss of central vision, and loss of the left or right side of the visual field. We are also working on lens distortion effects to simulate macular degeneration and lens or cornea irregularities.
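The four field-loss conditions above can be illustrated as a per-pixel visibility mask applied to each rendered frame. This is a minimal sketch, not the project's implementation: it assumes gaze is fixed at the center of the head-mounted display (no eye tracking), uses hard mask edges rather than a gradual falloff, and the names `Condition`, `is_visible`, `build_mask`, and the `radius` threshold are hypothetical.

```python
from enum import Enum


class Condition(Enum):
    TUNNEL_VISION = "tunnel"    # only the central field stays visible
    CENTRAL_LOSS = "central"    # the central field is blocked
    LEFT_FIELD_LOSS = "left"    # the left half of the visual field is blocked
    RIGHT_FIELD_LOSS = "right"  # the right half of the visual field is blocked


def is_visible(x, y, condition, radius=0.3):
    """Return True if normalized display position (x, y) remains visible.

    x and y lie in [-1, 1] with (0, 0) at the display center, which this
    sketch assumes is the gaze point. radius is a hypothetical threshold
    in the same normalized units.
    """
    r = (x * x + y * y) ** 0.5
    if condition is Condition.TUNNEL_VISION:
        return r <= radius
    if condition is Condition.CENTRAL_LOSS:
        return r > radius
    if condition is Condition.LEFT_FIELD_LOSS:
        return x >= 0.0
    if condition is Condition.RIGHT_FIELD_LOSS:
        return x <= 0.0
    raise ValueError(f"unknown condition: {condition}")


def build_mask(width, height, condition, radius=0.3):
    """Build a per-pixel mask: 1 keeps the pixel, 0 blacks it out."""
    mask = []
    for row in range(height):
        # Map pixel centers to [-1, 1]; y increases upward.
        y = 1.0 - 2.0 * (row + 0.5) / height
        line = []
        for col in range(width):
            x = 2.0 * (col + 0.5) / width - 1.0
            line.append(1 if is_visible(x, y, condition, radius) else 0)
        mask.append(line)
    return mask


if __name__ == "__main__":
    for row in build_mask(5, 5, Condition.LEFT_FIELD_LOSS):
        print(row)
```

In a renderer, the mask would be multiplied into each eye's image before display; a soft-edged version would replace the 0/1 values with a smooth function of `r` or `x`.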


Meditation Chamber: The goal of this research is to design and build an immersive virtual environment that uses visual, audio, and tactile cues to create, guide, and sustain a patient's relaxation and meditation experience. Meditation and guided imagery are well established as helpful in treating and preventing a number of diseases that carry a high cost in both financial terms and human suffering.

Pain Distraction: This environment is intended to ease the minds of children undergoing cancer treatment by immersing them in another world. It will take the form of an interactive game environment that draws their attention away from the pain and discomfort they experience during treatment.

BNAVE: We are developing an immersive virtual environment display, called the Balance NAVE (BNAVE), for research into the rehabilitation of balance disorders. With this system, the therapist can create varying degrees of sensory conflict and congruence in people with balance disorders. Because the visual scenes can be changed to suit the therapist's needs, the BNAVE is a promising rehabilitation tool.

NAVE: The Non-expensive Automatic Virtual Environment (NAVE) is a low-cost, PC-driven, multi-screen, multi-user, stereoscopic, multi-sensory virtual environment that preserves many of the desirable elements of the original CAVE environment but can be built at a fraction of the cost of a CAVE.

Virtual Workbench: The Virtual Workbench is a non-immersive virtual reality display system. The user views a large stationary display that generates stereoscopic imagery similar to that used in 3D movies. The user's head position is tracked and taken into account when rendering, and users can move objects with hand-held devices.
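Accounting for head position on a fixed display is commonly done with an asymmetric (off-axis) view frustum recomputed every frame from the tracked eye position. The sketch below shows that general technique under stated assumptions; it is not taken from the Workbench itself. It assumes the tracked eye has already been transformed into the screen's local frame (screen centered at the origin in the z = 0 plane, x right, y up, z toward the viewer), so a tilted workbench surface is handled by that transform. `Frustum` and `head_coupled_frustum` are hypothetical names; the returned values are the six parameters an OpenGL-style `glFrustum(left, right, bottom, top, near, far)` call expects, paired with a view matrix that translates by the negated eye position.

```python
from dataclasses import dataclass


@dataclass
class Frustum:
    left: float
    right: float
    bottom: float
    top: float
    near: float
    far: float


def head_coupled_frustum(eye, screen_w, screen_h, near, far):
    """Compute an off-axis frustum for an eye looking at a fixed screen.

    eye = (ex, ey, ez) in the assumed screen-local frame, with ez > 0.
    screen_w and screen_h are the physical screen dimensions in the same
    units as eye.
    """
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("eye must be in front of the screen (z > 0)")
    # Similar triangles: scale the screen edges, measured relative to the
    # eye, from distance ez down to the near-plane distance.
    scale = near / ez
    half_w, half_h = screen_w / 2.0, screen_h / 2.0
    return Frustum(
        left=(-half_w - ex) * scale,
        right=(half_w - ex) * scale,
        bottom=(-half_h - ey) * scale,
        top=(half_h - ey) * scale,
        near=near,
        far=far,
    )


if __name__ == "__main__":
    # Head shifted right of center: the frustum becomes asymmetric.
    print(head_coupled_frustum((0.5, 0.0, 1.0), 2.0, 2.0, 0.5, 100.0))
```

For stereo, the function would be called once per eye, offsetting the tracked head position by half the interocular distance; this sketch assumes that offset lies along the screen's x axis.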
