AR/VR Researchers Bring Immersive Experience to News Stories

It hasn’t been long since consumers put down the newspaper and picked up their phones to get their news.

It may not be long before augmented reality/virtual reality (AR/VR) headsets cause them to keep their phones in their pockets when they want to read The New York Times or The Washington Post.

Data visualization and AR/VR researchers at Georgia Tech are exploring how users can interact with news stories through AR/VR headsets and are determining which stories are best suited for virtual presentation.

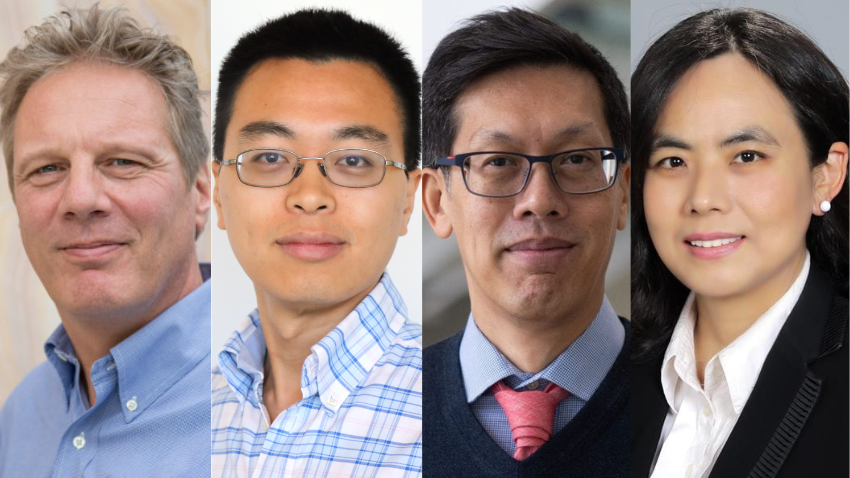

Tao Lu, a Ph.D. student in the School of Interactive Computing, Assistant Professor Yalong Yang, and Associate Professor Alex Endert led a recent study that they say is among the first to explore user preferences for news stories designed for virtual reality.

The researchers will present their paper on the study at the 2025 Conference on Human Factors in Computing Systems (CHI 2025) this week in Yokohama, Japan.

Digital platforms have elevated explanatory journalism, which provides greater context for a subject through data, images, and in-depth analysis. These platforms also allow stories to be more visually appealing through graphic design and animation.

Lu said AR/VR can further elevate explanatory journalism through 3D, interactive spatial environments. He added that media organizations should think about how the stories they produce will appear in AR/VR as much as they think about how they will appear on mobile devices.

“We’re giving users another option to experience the story and for designers and developers to show their stories in another modality,” Lu said.

“A screen-based story on a smartphone is easy to use and cost-effective. However, some stories are better presented in AR/VR, which will become more popular as technology gets cheaper. AR/VR can provide 3D spatial information that would be hard to understand on a phone or desktop screen.”

Active or Passive Interactions?

Using a Meta Quest 3 headset, the researchers and their collaborators created four immersive virtual reality simulations from web-based news stories produced by The New York Times:

- Why opening windows was key to classroom ventilation during the Covid-19 pandemic

- The destruction of Black homes and businesses in the Tulsa Race Massacre

- How climate change could create dramatic dangers in the Atlantic Ocean

- How 9/11 changed Manhattan’s financial district

The study aimed to determine whether users prefer to be actively or passively immersed in a story, whether they prefer a first-person or third-person point of view, and which combinations of these modes they favor.

“We’re in the nascent stages of storytelling in VR,” said Endert, whose research specializes in data visualization. “We lack the design knowledge of which mode of immersion we should use if we want a certain reaction from the audience. Understanding design is at the crux of our study.”

Active immersion gives the user complete control over their experience. The classroom simulation offers a first-person point of view and allows users to teleport from one point in the classroom to another. New information from the story is presented each time the user moves to a new point.

The researchers acknowledged they could design a free-roaming simulation that allows users to walk freely within the classroom. However, they restricted that ability for this study due to safety concerns and lab space constraints.

In the Tulsa Race Massacre simulation, which uses a passive, first-person point of view, users follow a predefined route along one of Tulsa’s main streets. Information about each building is presented at each step.

The Atlantic Ocean simulation is an active, third-person experience. The user sees a representation of Earth and can select which interaction points to explore to learn new information.

The 9/11 simulation is a passive, third-person experience. Each step includes a narrative paragraph with companion visual elements, and users proceed to the next step through a navigation trigger.

Finding the Right Balance

Lu said that first-person, active experiences enhance spatial awareness, while third-person, passive experiences improve contextual understanding. Journalists and VR designers must determine case by case which presentation is most effective.

Yang said the goal should be to balance interests in making those determinations, which might require compromise. Knowing how users prefer to consume news is critical, but journalists still have an editorial responsibility to decide what the public should know and how to present information.

“You have more freedom to explore in an active experience versus a passive experience,” Yang said. “But if you give them too much freedom, they might stray from your planned narrative and miss important information you think they should know. We want to understand how we can balance both ends of this spectrum and what the right level is that we can give people in storytelling.”

This study and others indicate that users retain information better when they feel they are part of the story. Yang said the technology to make that possible isn’t there yet, but it’s coming along as wearable VR devices become more accessible.

The open question is whether these devices will become people’s preferred technology for consuming content. According to the Pew Research Center, 86% of U.S. adults say they at least sometimes get their news from a smartphone, computer, or tablet.

“I believe AR and VR will be mainstream in the future and will replace everything, but I think there’s a transition period,” Yang said. “Older devices will exist and act as support. It’s an ecosystem.”