Pancaked Water Droplets Help Launch Europe’s Fastest Supercomputer

JUPITER became the world’s fourth-fastest supercomputer when it debuted last month. Although the system is housed in Germany at the Jülich Supercomputing Centre (JSC), Georgia Tech played a supporting role in helping it land on the latest TOP500 list.

In November 2024, JSC granted Assistant Professor Spencer Bryngelson exclusive access to the system through the JUPITER Research and Early Access Program (JUREAP).

While helping prepare Europe’s fastest supercomputer for launch, the joint project also yielded valuable simulation data on the effects of shock waves in medicine and transportation.

“The shock-droplet problem has been a hallmark test problem in fluid dynamics for some decades now. It is sufficiently challenging to study that it keeps me scientifically interested, though the results are manifestly important,” Bryngelson said.

“Understanding the droplet behavior in some extreme regimes remains an open scientific problem of high engineering value.”

Through JUREAP, JSC engineers tested Bryngelson’s Multi-Component Flow Code (MFC) on their computers. The project simulated how liquid droplets behave when struck by a large, high-velocity shock wave moving much faster than the speed of sound.

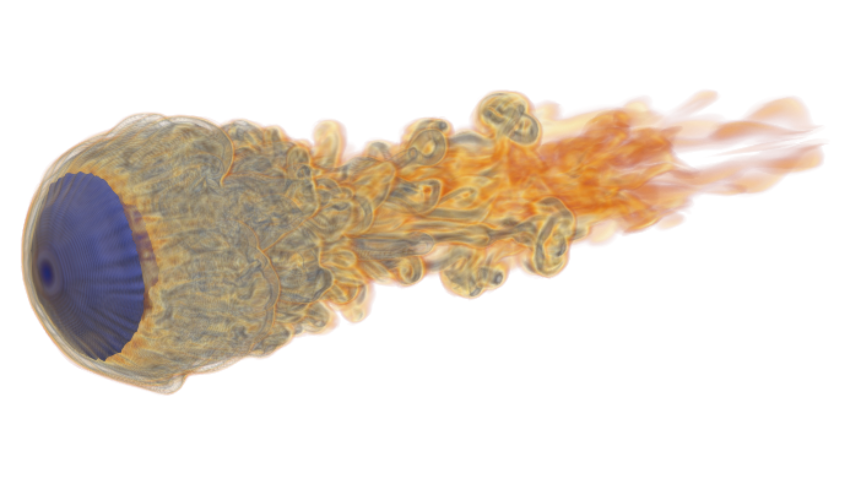

The tests produced visualizations of droplets deforming into pancake shapes and then ejecting vortex rings as the shock wave broke them apart. The simulations also measured vorticity, the swirling motion of the air flowing behind the droplets.
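For readers curious about what measuring vorticity involves, the sketch below computes it for a simple two-dimensional flow. It is only an illustration and is not taken from MFC: the function name `vorticity_2d`, the grid size, and the rotating test flow are all assumptions made for this example. It uses the standard two-dimensional definition, the x-derivative of the vertical velocity minus the y-derivative of the horizontal velocity, approximated with central differences.

```python
def vorticity_2d(u, v, dx, dy):
    """Return dv/dx - du/dy at interior grid points using central differences.

    u[j][i] and v[j][i] hold the x- and y-velocity at row j (y) and column i (x).
    """
    ny, nx = len(u), len(u[0])
    omega = []
    for j in range(1, ny - 1):
        row = []
        for i in range(1, nx - 1):
            dv_dx = (v[j][i + 1] - v[j][i - 1]) / (2.0 * dx)
            du_dy = (u[j + 1][i] - u[j - 1][i]) / (2.0 * dy)
            row.append(dv_dx - du_dy)
        omega.append(row)
    return omega


# Hypothetical test flow: solid-body rotation, u = -rate * y, v = rate * x.
# Its exact vorticity is 2 * rate everywhere.
rate = 3.0
n, h = 5, 0.1
xs = [i * h for i in range(n)]
ys = [j * h for j in range(n)]
u = [[-rate * y for x in xs] for y in ys]
v = [[rate * x for x in xs] for y in ys]

omega = vorticity_2d(u, v, h, h)
```

Every interior value of `omega` should come out close to `2 * rate`, which is the textbook result for a uniformly rotating fluid. Production flow solvers work in three dimensions and on far larger grids, but the quantity being measured is the same.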

Vorticity is one variable aerospace engineers consider when building aircraft designed to fly at supersonic and hypersonic speeds. Small droplets and vortices pose significant hazards for high-Mach vessels.

These computer models reduce the risk and cost associated with physical test runs. By simulating extreme scenarios, the JUREAP project demonstrated a safer and more efficient way to evaluate aerospace systems.

The human body is another fluid space where fast, high-energy flows can occur.

Simulations help medical researchers create less invasive shock wave treatments. Such treatments have uses ranging from breaking up kidney stones to treating inflammation.

MFC’s versatility for large- and small-scale applications made it suitable for testing JUPITER in its early stages. The project’s success even earned it a JUREAP certificate for scaling efficiency and node performance.

“The use of application codes to test supercomputers is common. We’ve participated in similar programs for OLCF Frontier and LLNL El Capitan,” said Bryngelson, a faculty member with Georgia Tech’s School of Computational Science and Engineering.

“Engineers at supercomputer sites usually find and sort most problems on their own. But deploying workloads characteristic of what the JUPITER will run in practice stresses it in new ways. In these instances, we usually end up identifying some failure modes.”

The JSC and Georgia Tech researchers named their joint project Exascale Multiphysics Flows (ExaMFlow).

ExaMFlow helps keep JUPITER on pace to become Europe’s first exascale supercomputer. This designation refers to any machine capable of at least one exaflop, or one quintillion (“1” followed by 18 zeros) calculations per second.
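To put that figure in perspective, the short sketch below works through the arithmetic. The workload size is a hypothetical number chosen for illustration, and the petascale machine (a thousand times slower) is included only for comparison. Neither figure describes JUPITER or any real simulation.

```python
EXAFLOP = 10**18   # one quintillion calculations per second
PETAFLOP = 10**15  # one quadrillion calculations per second

# "1" followed by 18 zeros
zeros_in_exaflop = len(str(EXAFLOP)) - 1

# Hypothetical workload size, chosen only so the numbers come out round.
workload = 36 * 10**20  # total calculations

exa_seconds = workload / EXAFLOP    # time on a one-exaflop machine
peta_seconds = workload / PETAFLOP  # time on a one-petaflop machine

print(f"Exascale:  {exa_seconds / 3600:.1f} hours")
print(f"Petascale: {peta_seconds / 86400:.1f} days")
```

Under these assumptions, a job that takes an hour at one exaflop would occupy a one-petaflop system for about six weeks.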

All three systems that rank ahead of JUPITER are exascale supercomputers. They are El Capitan at Lawrence Livermore National Laboratory, Frontier at Oak Ridge National Laboratory, and Aurora at Argonne National Laboratory.

JUPITER performs more than 60 billion operations per second for every watt of power it draws. This makes the supercomputer the most energy-efficient system among the top five.

ExaMFlow ran Bryngelson’s software on JSC’s JUWELS Booster and JUPITER Exascale Transition Instrument (JETI). The two modules form the backbone of JUPITER’s full design.

ExaMFlow’s report showed that MFC performed with near-ideal scaling behavior on JUWELS and JETI compared to similar systems based on NVIDIA A100 GPUs.
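The article does not say which scaling metric the report used, so the sketch below shows the two textbook definitions under that caveat. In strong scaling the total problem size is fixed and the ideal run time shrinks in proportion to the node count. In weak scaling the work per node is fixed and the ideal run time stays constant. The function names and all timings are hypothetical and are not drawn from the ExaMFlow report.

```python
def strong_scaling_efficiency(base_nodes, base_time, nodes, time):
    """Fixed total problem size: ideal run time falls as 1 / nodes."""
    return (base_time * base_nodes) / (time * nodes)


def weak_scaling_efficiency(base_time, time):
    """Fixed work per node: ideal run time stays constant as nodes grow."""
    return base_time / time


# Hypothetical timings in seconds, for illustration only.
ideal = strong_scaling_efficiency(base_nodes=8, base_time=100.0, nodes=64, time=12.5)
strong = strong_scaling_efficiency(base_nodes=8, base_time=100.0, nodes=64, time=13.0)
weak = weak_scaling_efficiency(base_time=100.0, time=104.0)

print(f"ideal strong scaling:  {ideal:.0%}")
print(f"sample strong scaling: {strong:.1%}")
print(f"sample weak scaling:   {weak:.1%}")
```

An efficiency of 100 percent is the ideal. "Near-ideal" scaling means the measured value stays close to that mark as more nodes are added.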

Access to NVIDIA hardware at Georgia Tech played a key role in ExaMFlow’s success.

The Institute hosts the Phoenix Research Computing Cluster, which includes A100 GPUs among its arsenal of components. Bryngelson’s lab owns NVIDIA A100 GPUs and four GH200 Grace Hopper Superchips.

Because JUPITER is equipped with around 24,000 Grace Hopper Superchips, Bryngelson’s experience with the hardware proved especially valuable to the ExaMFlow project.

“The Grace Hopper chip is interesting. It’s not challenging to use like a regular GPU device when one is familiar with running NVIDIA hardware. The more fun part is using its tightly coupled CPU to GPU interconnect to make use of the CPU as well,” Bryngelson said.

“It’s not immediately obvious how to best do this, though we used a few tricks to tune its use to our application. They appear to work nicely.”

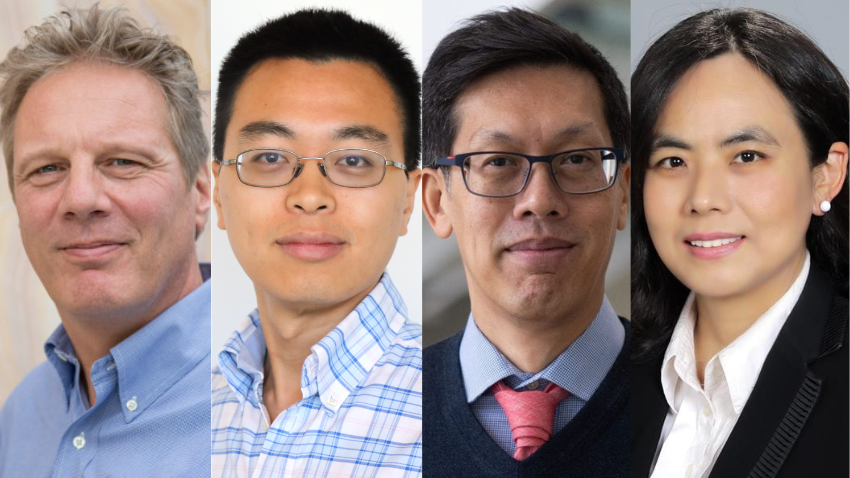

JSC researchers Luis Cifuentes, Rakesh Sarma, Seong Koh, and Sohel Herff played important roles in running Bryngelson’s MFC software on early JUPITER modules.

The ExaMFlow team included NVIDIA scientists Nikolaos Tselepidis and Benedikt Dorschner.

The pair observed their company’s hardware in use in the field. They returned to NVIDIA with notes that help the company build future devices tailored to the needs of scientific computing researchers.

“We try to be prepared for the latest, biggest computers. Being able to take immediate advantage of the largest systems is a valuable capability,” Bryngelson said.

“When the early access systems arrive, it’s a great opportunity for the teams involved to test the machines, demonstrate and tune scientific software, and meet very capable new collaborators.”