Autonomous Monitoring Approach Gives Farmers Detailed 4D Look at Crops

Researchers at the Georgia Institute of Technology, University of Georgia, and Georgia Tech Research Institute have developed a new computer vision-based method for autonomously monitoring agricultural crops that may lower costs, improve harvest yields, and ultimately help provide more food to starving people around the world.

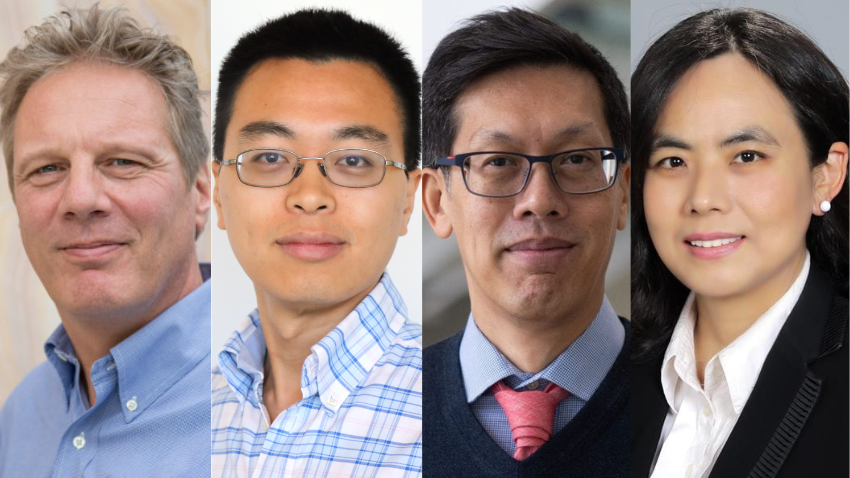

Going beyond the crop monitoring techniques currently used in precision agriculture, the Georgia Tech team, led by School of Interactive Computing (IC) Professor Frank Dellaert and Ph.D. student Jing Dong, has created a four-dimensional (4D: 3D plus time) reconstruction approach that can provide farmers with detailed crop information, including plant heights and growth rates.

Traditionally, crop monitoring has been done manually, which is very labor- and cost-intensive. Satellites and unmanned aerial and ground vehicles have reduced costs somewhat in recent years, but the amount of useful information that can be gathered from 2D images and 3D reconstructions is limited.

According to the researchers, existing technologies were developed for static scenes. For example, current 3D tools assume that each geometric entity identified in an image will maintain a constant appearance over time.

“Of course, crops are constantly growing, moving in the wind, and changing color, etc., which makes it very difficult to automate the precise alignment of static images over time,” said Dong.

“What we have been able to do is to account for the dynamic nature of continuously growing crops and animate a whole growing season’s worth of 3D images into a 4D reconstruction that reveals a bounty of useful information to farmers and other precision agricultural systems.”

At the heart of the team’s approach is a highly robust data association algorithm that solves the problem of linking related images taken at different times, even though the scenes contain highly repetitive structures and differ significantly in lighting and appearance.

To supply data for the algorithm, the team equipped a standard farm tractor with color cameras, GPS, inertial measurement units, and other sensors, and drove it through a peanut field in Tifton, Georgia. Data was collected over 23 sessions spanning 89 days during the 2016 summer growing season.

However, using a tractor presented another hurdle for the team to overcome. Existing methods of creating spatio-temporal maps, a primary tool for location recognition, were designed for autonomous driving applications. In those settings, the camera's viewpoint stays relatively stable as a vehicle moves down the road.

A tractor moving through a cultivated field is a different story.

“So, not only are the plants always changing, the camera angles are constantly shifting as the tractor goes over bumps and through ruts in the soil. Multiple rows and repetitive structures exacerbate the problem even further,” said Dong.

To account for the dynamic nature of the data, the new approach estimates camera orientations and positions, as well as visual landmarks and reference points, separately for each individual row.

This information is then used to build visual correspondences that link all 23 data sessions for each specific row. From there, the team uses a search-and-optimization method to reject likely false-positive matches between rows and sessions and to eliminate other outliers that could degrade the reconstruction.
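The article does not spell out the team's search-and-optimization procedure. As a generic illustration of how false-positive matches can be filtered out, the Python sketch below uses RANSAC, a standard consensus technique that is a stand-in here, not the authors' method. It assumes hypothetical 2D landmark correspondences between two sessions of the same row and a pure translation between them; the function name `ransac_translation`, the 5 cm threshold, and the sample coordinates are illustrative choices.

```python
import random
from typing import List, Tuple

Point = Tuple[float, float]


def ransac_translation(
    src: List[Point],
    dst: List[Point],
    threshold: float = 0.05,  # assumed inlier tolerance, in metres
    iterations: int = 200,
    seed: int = 0,
) -> Tuple[Point, List[int]]:
    """Estimate a 2D translation mapping src[i] -> dst[i]; return it with the inlier indices.

    Each pair (src[i], dst[i]) is a hypothetical landmark match between two sessions.
    Matches that disagree with the consensus offset by more than `threshold` are
    treated as false positives. Assumes at least one candidate match.
    """
    rng = random.Random(seed)
    best_inliers: List[int] = []
    for _ in range(iterations):
        # A single correspondence fully determines a pure translation hypothesis.
        k = rng.randrange(len(src))
        tx = dst[k][0] - src[k][0]
        ty = dst[k][1] - src[k][1]
        # Count the matches that agree with this hypothesis.
        inliers = [
            i for i, (s, d) in enumerate(zip(src, dst))
            if (s[0] + tx - d[0]) ** 2 + (s[1] + ty - d[1]) ** 2 <= threshold ** 2
        ]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    # Refine the offset as the mean displacement over the consensus set.
    n = len(best_inliers)
    tx = sum(dst[i][0] - src[i][0] for i in best_inliers) / n
    ty = sum(dst[i][1] - src[i][1] for i in best_inliers) / n
    return (tx, ty), best_inliers


# Hypothetical matches: four consistent ones and one false positive (the last).
src = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.5), (3.0, 1.0), (4.0, 1.5)]
dst = [(x + 0.3, y - 0.1) for x, y in src[:4]] + [(9.0, 9.0)]
offset, inliers = ransac_translation(src, dst)
```

Because the four consistent matches agree on one offset while the false positive agrees only with itself, the consensus set excludes it. A real system would estimate a full camera pose rather than a 2D shift, and would also have to cope with matches to the wrong, visually identical row.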

The output was a 4D point cloud (a time series of 3D point clouds that are aligned into a single coordinate frame) containing 36 million points.
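To make the idea of a 4D point cloud concrete, here is a minimal Python sketch, assuming each session's cloud has already been registered by a rigid transform (rotation `R`, translation `t`) of the kind the data association step would produce. The helper names, the flat ground plane at z = 0, and the 95th-percentile height measure are hypothetical choices for illustration, not the team's processing pipeline; they show one simple way per-session plant heights and growth rates could be read off such a structure.

```python
from typing import Dict, List, Sequence, Tuple

Point3 = Tuple[float, float, float]


def to_common_frame(points: List[Point3], R: Sequence[Sequence[float]], t: Point3) -> List[Point3]:
    """Apply the rigid transform p' = R p + t to move one session's cloud into the shared frame."""
    return [
        tuple(sum(R[r][c] * p[c] for c in range(3)) + t[r] for r in range(3))
        for p in points
    ]


def height_curve(cloud4d: Dict[int, List[Point3]], ground_z: float = 0.0, q: float = 0.95) -> Dict[int, float]:
    """Per-session canopy height: the q-quantile of z above an assumed flat ground plane."""
    heights: Dict[int, float] = {}
    for day, pts in sorted(cloud4d.items()):
        zs = sorted(p[2] for p in pts)
        heights[day] = zs[int(q * (len(zs) - 1))] - ground_z
    return heights


def growth_rates(heights: Dict[int, float]) -> Dict[Tuple[int, int], float]:
    """Average height change per day between consecutive sessions."""
    days = sorted(heights)
    return {(a, b): (heights[b] - heights[a]) / (b - a) for a, b in zip(days, days[1:])}


# A 4D point cloud as a mapping from session day to an aligned 3D cloud (toy data).
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
cloud4d = {
    10: to_common_frame([(0.0, 0.0, 0.1), (0.1, 0.0, 0.2), (0.2, 0.0, 0.3)], identity, (0.0, 0.0, 0.0)),
    20: to_common_frame([(0.0, 0.0, 0.2), (0.1, 0.0, 0.3), (0.2, 0.0, 0.4)], identity, (0.0, 0.0, 0.1)),
}
heights = height_curve(cloud4d)
rates = growth_rates(heights)
```

Using a high quantile rather than the maximum z makes the height estimate less sensitive to a few stray points; computing the same quantities per grid cell instead of per row would yield a growth-rate heat map.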

“With this, we can visualize multiple types of output like height curves, growth rate heat maps, and detailed local mesh models that are accessible by farmers or other precision agriculture systems,” said Dong. “All of this information is very useful for making decisions about irrigation, pest control, harvesting, crop rotations, and much more.

“There is still much to be done as we continue to analyze the data and explore potential machine learning applications, but our ultimate goal is to do all that we can to bring more food to people around the world impacted by food shortages and famine.”

The full paper, “4D Crop Monitoring: Spatio-Temporal Reconstruction for Agriculture,” was presented at the 2017 IEEE International Conference on Robotics and Automation (ICRA), held in Singapore from May 29 to June 3.

Dellaert and IC Professor Byron Boots advise Dong. The research is supported by the U.S. Department of Agriculture under award number 2014-67021-22556.