Researchers Unleash 'Stanceosaurus' to Cross-culturally Combat Misinformation

Georgia Tech researchers and students are using machine learning tools to break through language barriers and help non-English-speaking social media users become more aware of misinformation spreading online.

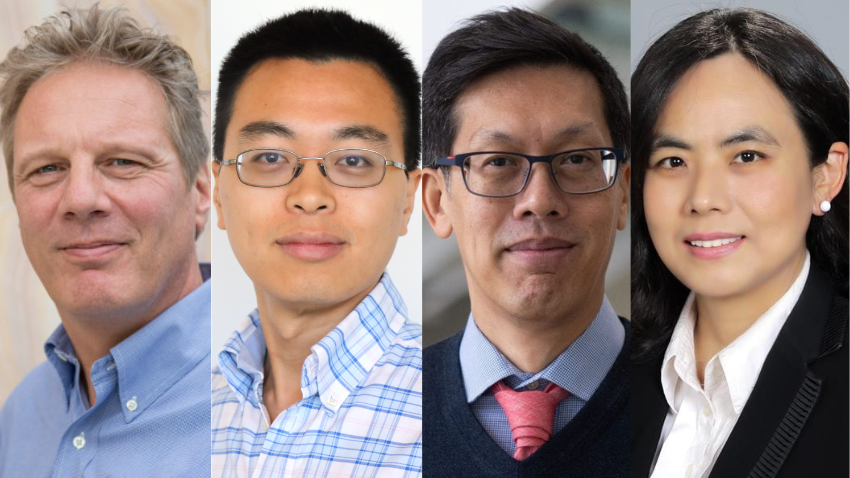

School of Interactive Computing associate professor Alan Ritter and assistant professor Wei Xu, along with students Jonathan Zheng, Ashutosh Baheti, and Tarek Naous, will introduce “Stanceosaurus” at the 2022 Conference on Empirical Methods in Natural Language Processing, which runs from Dec. 7-11 in Abu Dhabi, United Arab Emirates.

The corpus consists of 28,033 tweets in English, Hindi, and Arabic, each annotated with its stance toward one of 251 misinformation claims. The researchers named it “Stanceosaurus” because they believe it to be the largest dataset of its kind. The project was funded in part by the Intelligence Advanced Research Projects Activity (IARPA) Better Program.

A stance describes whether a social media post supports or refutes a misinformation claim. Ritter and Xu are using the Stanceosaurus dataset to train a machine learning model that can quickly identify whether a tweet about a misinformation claim supports or refutes it. Such a model helps journalists and fact-checkers see whether a misinformation claim is gaining support and how fast it is spreading.

“You want to automate the prediction of stance,” Ritter said. “If you have a claim that aliens built the pyramids, and then you have a tweet that mentions some of those keywords, you want to have a machine learning model that will take the claim and the tweet as input and then make a prediction on whether the tweet is supporting or refuting the claim.

“Any tweets supporting that claim can be quickly brought to the attention of content moderators, and they could take appropriate action according to the policies of the social media company.”
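The workflow Ritter describes can be sketched as a two-step pipeline: pick out tweets that share keywords with a claim, then hand each (claim, tweet) pair to a stance model. The sketch below is illustrative only. The names `keywords`, `find_candidate_tweets`, and `predict_stances`, the stopword list, and the `min_overlap` threshold are hypothetical, and the `classify` argument stands in for a trained model like the one the researchers describe, which is not reproduced here.

```python
from __future__ import annotations

import re
from typing import Callable, Iterable

# Hypothetical, minimal stopword list used only for this illustration.
STOPWORDS = {"the", "a", "an", "and", "of", "that", "is", "are", "to", "in", "on", "for"}


def keywords(text: str) -> set[str]:
    """Lowercased word tokens of `text`, minus stopwords (a crude tokenizer)."""
    return {w for w in re.findall(r"\w+", text.lower()) if w not in STOPWORDS}


def find_candidate_tweets(claim: str, tweets: Iterable[str], min_overlap: int = 2) -> list[str]:
    """Keep tweets that share at least `min_overlap` keywords with the claim."""
    claim_kw = keywords(claim)
    return [t for t in tweets if len(claim_kw & keywords(t)) >= min_overlap]


def predict_stances(
    claim: str,
    tweets: Iterable[str],
    classify: Callable[[str, str], str],
) -> list[tuple[str, str]]:
    """Send each (claim, tweet) pair to `classify` and collect (tweet, label) results.

    `classify` is a placeholder for a trained stance model; none is included here.
    """
    return [(t, classify(claim, t)) for t in find_candidate_tweets(claim, tweets)]


if __name__ == "__main__":
    claim = "Aliens built the pyramids"
    tweets = [
        "No way aliens built the pyramids, Egyptians did",
        "Aliens definitely built the pyramids!",
        "Nice weather today",
    ]

    # Toy rule-based stand-in for a real model, for demonstration only.
    def toy_classify(claim: str, tweet: str) -> str:
        return "refuting" if "no way" in tweet.lower() else "supporting"

    for tweet, label in predict_stances(claim, tweets, toy_classify):
        print(f"{label}: {tweet}")
```

Keeping the classifier as a plain function argument separates candidate retrieval from stance prediction, so a real multilingual model could be swapped in without changing the filtering step. The keyword filter itself is a simplification and would likely miss paraphrases.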

The misinformation claims in Stanceosaurus come from 15 fact-checking sources and cover a diverse range of geographic regions and cultures.

While Stanceosaurus may be the largest such corpus, Xu said what makes it unique is that it can help combat misinformation across multiple languages.

“It’s very hard to break the precedent,” Xu said. “We had to do something much better than the precedent in order to make a difference. Misinformation is a very popular area of study. However, the quality of the prior work has various pitfalls. Most of the work falls under a single language, which is bad because misinformation is a global problem. A lot of misinformation targets minority groups.”

“Recent models do this in a language-independent way,” added Ritter. “The interesting thing about these multilingual models is that you can train them on this annotated data that’s labeled only in English, and then you can take the model and use it to make predictions on other languages.”

Xu said some hurdles remain, particularly in interpreting the context or hidden meaning behind some tweets. A tweet could be sarcastic, or it could pose a question, and it can be difficult for a machine to tell whether the question is sincere or rhetorical.

“The difficulty is that some of the stances are a little more subtle,” Xu said. “Maybe someone phrases it like, ‘Is this really true?’ when we know the person doesn’t believe it’s true. They phrase it as a question, but they’re still leaning toward refuting. So, there are some gray areas with these labels.”

That’s why the research team used five stance labels for their model: refuting, supporting, querying, discussing, and irrelevant. A querying tweet may simply ask an honest question to get more information. In a discussing tweet, one or more parties may provide neutral information about the context or veracity of the claim.
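The five-way label scheme can be written down as a small enumeration. As a hypothetical illustration of how journalists might use a model’s output to see whether a claim is gaining support, the sketch below computes the share of side-taking tweets that support a claim and counts supporting tweets per day. The `Stance` enum, the `support_ratio` and `daily_support_counts` functions, and the choice to leave querying, discussing, and irrelevant tweets out of the ratio are assumptions for illustration, not part of the Stanceosaurus release.

```python
from __future__ import annotations

from collections import Counter
from datetime import date
from enum import Enum
from typing import Iterable, Optional


class Stance(Enum):
    """The five stance labels described in the article."""
    SUPPORTING = "supporting"
    REFUTING = "refuting"
    QUERYING = "querying"
    DISCUSSING = "discussing"
    IRRELEVANT = "irrelevant"


def support_ratio(labels: Iterable[Stance]) -> Optional[float]:
    """Share of supporting tweets among tweets that either support or refute.

    Assumption: querying, discussing, and irrelevant tweets are left out.
    Returns None when no tweet takes a side.
    """
    counts = Counter(labels)
    sided = counts[Stance.SUPPORTING] + counts[Stance.REFUTING]
    if sided == 0:
        return None
    return counts[Stance.SUPPORTING] / sided


def daily_support_counts(records: Iterable[tuple[date, Stance]]) -> dict[date, int]:
    """Number of supporting tweets per day, in date order (a rough spread signal)."""
    counts = Counter(day for day, stance in records if stance is Stance.SUPPORTING)
    return dict(sorted(counts.items()))


if __name__ == "__main__":
    labels = [Stance.SUPPORTING, Stance.REFUTING, Stance.QUERYING, Stance.SUPPORTING]
    print(support_ratio(labels))
```

Excluding the querying label from the ratio is a simplification: as Xu notes, a question can still lean toward refuting, so a real analysis might treat those tweets differently.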

As big as Stanceosaurus is, there’s plenty of room for growth. It currently covers three languages, two of which Ritter and Xu chose because they had students who already spoke them.

Ritter and Xu said misinformation research is a community effort. Their work builds on earlier efforts such as Google’s BERT language model, and they hope other researchers will build on Stanceosaurus.

“We’re very interested in expanding the languages we cover,” Xu said. “We are working on expanding it in Spanish. The Spanish-speaking community in the U.S. may be more vulnerable to misinformation. Ideally, we want to cover all the major languages in the world. Hopefully, it encourages more researchers to make a major effort to contribute to our work.”

“I think that would be great if people can have some sort of centralized repository where people could contribute new languages to Stanceosaurus,” added Ritter. “For whatever reason, no one had ever made a multilingual data set for this stance task, which is surprising to me because I think it’s important to be able to recognize misinformation across languages and cultures.”