Students Test AI Strategies in Chicken Game Competition

As the final weeks of the semester arrived in CS 3600: Introduction to Artificial Intelligence (AI), students weren’t just studying for exams; they were preparing their autonomous “chicken agents” for a head-to-head AI competition.

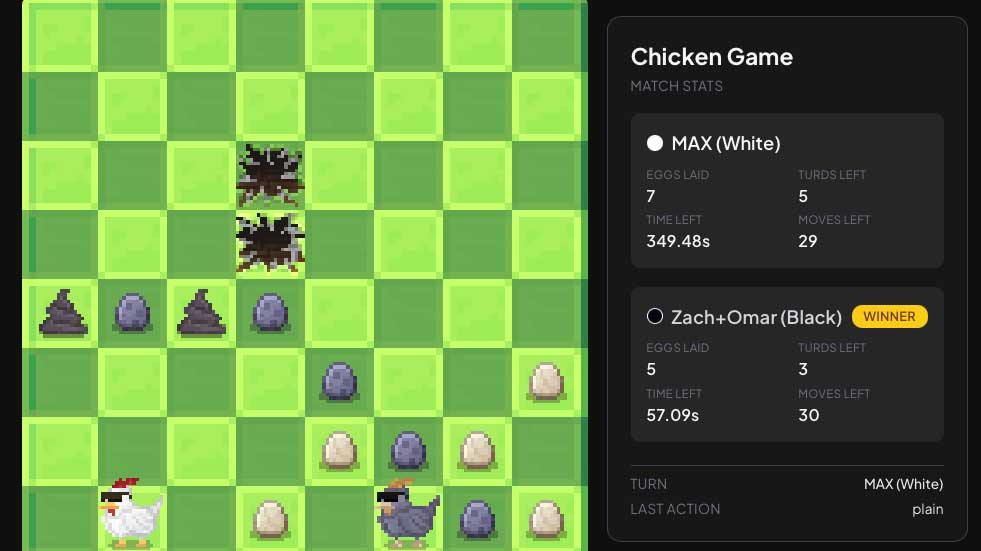

School of Computing Instruction faculty member Aaron Hillegass designed the assignment to challenge students to build an AI agent that can navigate a grid-based game, lay eggs strategically, avoid hidden trapdoors, and outsmart opponents using a mix of techniques learned in class.

To run the competition at scale, the class used ByteFight, a student-led AI competition platform that brings large-scale, head-to-head agent battles into the classroom.

For Hillegass, the project was a deliberate capstone experience.

“In any AI class, we spend much of our time talking about why techniques work, but students ultimately need to know how to use them to solve problems,” he said.

“A big open-ended problem is the best way to prepare them for the real world, where the first question is often, ‘What is the best strategy?’”

A Platform Built for Competition

In addition to its annual spring competition, ByteFight hosts course-specific challenges, including recent collaborations with CS 6601: Artificial Intelligence and CS 3600.

“We approached Professor Hillegass about running a competition similar to one we hosted for CS 6601, and he was really excited to collaborate,” said Roopjeet Singh, a second-year computer science major and member of the ByteFight team.

“He pitched us the idea of a chicken-and-egg game, and we implemented the game engine and updated the platform to scale it to hundreds of students.”

The collaboration brought the project to the scale of tens of thousands of simulated matches: more than 220 students competed in over 60,000 matches during the competition. Agents were ranked with an Elo rating system, similar to the one used for chess players, and ratings were updated as bots improved over time.
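The article does not spell out ByteFight's exact rating formula. As a rough illustration only, here is a minimal sketch of the standard Elo update, assuming a K-factor of 32 (a common chess default, and an assumption here). Each match moves the winner up and the loser down by an amount that grows with how unexpected the result was.

```python
from typing import Tuple


def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score (0 to 1) for player A against player B under standard Elo."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update_elo(rating_a: float, rating_b: float, score_a: float, k: float = 32.0) -> Tuple[float, float]:
    """Return updated ratings after one match.

    score_a is 1.0 if A wins, 0.5 for a draw, 0.0 if A loses.
    k = 32 is an assumed K-factor; ByteFight's actual parameters are not described.
    """
    delta = k * (score_a - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta  # zero-sum: B loses what A gains


print(update_elo(1500, 1500, 1.0))  # (1516.0, 1484.0)
```

Two evenly rated bots move 16 points apiece, while a 1400-rated underdog that beats a 1600-rated bot gains roughly 24. That asymmetry is why surprise wins late in a competition can reshuffle a leaderboard.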

“The most exciting part was watching the rankings shift as teams continuously improved their agents,” said Jaeheon Shim, a third-year computer science major.

“Seeing underdogs pull off surprising wins in the final battles was incredibly fun.”

A Student’s Approach: Strategy, Search, and a Little Bit of Rivalry

Computer science major Jason Mo’s winning agent, “StockChicken,” focuses on placing the most eggs while blocking opponents from doing the same.

To find the best moves, Mo uses minimax search with alpha-beta pruning, combined with the Bayesian statistics he learned in class to estimate where trapdoors might be hidden.
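StockChicken's code is not published with this story, so the following is only a generic sketch of depth-limited minimax with alpha-beta pruning. The `children` and `evaluate` callables are hypothetical stand-ins for a game's move generator and a heuristic (for example, a difference in eggs laid), and the toy tree is the classic textbook example rather than a real chicken-game position.

```python
import math
from typing import Any, Callable, List


def alphabeta(
    state: Any,
    depth: int,
    alpha: float,
    beta: float,
    maximizing: bool,
    children: Callable[[Any], List[Any]],
    evaluate: Callable[[Any], float],
) -> float:
    """Depth-limited minimax value of `state` with alpha-beta pruning."""
    kids = children(state)
    if depth == 0 or not kids:
        return evaluate(state)  # leaf or search horizon: fall back to the heuristic
    if maximizing:
        value = -math.inf
        for child in kids:
            value = max(value, alphabeta(child, depth - 1, alpha, beta, False, children, evaluate))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # the minimizer will never let play reach this branch
        return value
    value = math.inf
    for child in kids:
        value = min(value, alphabeta(child, depth - 1, alpha, beta, True, children, evaluate))
        beta = min(beta, value)
        if beta <= alpha:
            break  # the maximizer already has a better option elsewhere
    return value


# Hypothetical toy tree: root is a max node, inner lists are min nodes, ints are leaf scores.
tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
visited: List[int] = []


def toy_children(node: Any) -> List[Any]:
    return node if isinstance(node, list) else []


def toy_evaluate(node: Any) -> float:
    visited.append(node)  # record which leaves the search actually scored
    return node


best = alphabeta(tree, 2, -math.inf, math.inf, True, toy_children, toy_evaluate)
print(best, len(visited))  # 3 7
```

On this tree the search returns 3 and never scores two of the nine leaves: once the middle branch reveals a 2, the maximizing player already knows the first branch is better. Pruning never changes the minimax value; it only reduces how much of the tree has to be explored, which lets a bot search deeper in the same time budget.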

Before he could fine-tune his strategy, Mo's biggest challenge was simply understanding the game simulator.

“There’s some wacky stuff with how the game perspective is handled,” he said. “We had to start with a very simple bot just to make sure our foundation was strong.”

Debugging has also been its own adventure. He says it’s hard to tell if odd behavior comes from a bad heuristic or a bug in the search tree.

“I’ve definitely yelled at my chicken for running into the same trapdoor three times,” he said.

Mo has also come to enjoy watching his bot’s rating rise and fall, and he credits a friendly rivalry, especially with another strong bot built by two classmates, for pushing his strategy forward.

Connecting Class Concepts to Real AI Practice

The game challenges students to grapple with uncertainty, machine learning, planning, and search, Hillegass explained. The best agents use Bayes' Rule to avoid falling through trapdoors, employ tree search to explore potential futures, and use a neural network to select the most promising options.
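The article does not describe how the game reveals clues about trapdoors, so the sketch below invents a simple, hypothetical sensor purely to show the shape of a Bayes' Rule update. It assumes exactly one trapdoor somewhere on a small grid and a noisy signal that fires with probability 0.9 when the trapdoor is within one step of the agent and 0.1 otherwise. Each cell's posterior is its prior times the likelihood of the observation, renormalized so the probabilities sum to 1.

```python
from typing import Dict, Tuple

Cell = Tuple[int, int]

# Hypothetical sensor model; the real game's observation rules are not described.
P_SIGNAL_NEAR = 0.9  # chance of a signal when the trapdoor is within one step
P_SIGNAL_FAR = 0.1   # false-alarm chance when it is farther away


def manhattan(a: Cell, b: Cell) -> int:
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def likelihood(signal: bool, trap: Cell, agent: Cell) -> float:
    """P(observation | trapdoor at `trap`) under the assumed sensor model."""
    p = P_SIGNAL_NEAR if manhattan(trap, agent) <= 1 else P_SIGNAL_FAR
    return p if signal else 1.0 - p


def bayes_update(prior: Dict[Cell, float], signal: bool, agent: Cell) -> Dict[Cell, float]:
    """Posterior over trapdoor locations: prior times likelihood, then normalize."""
    unnormalized = {cell: p * likelihood(signal, cell, agent) for cell, p in prior.items()}
    total = sum(unnormalized.values())
    return {cell: p / total for cell, p in unnormalized.items()}


# Usage: uniform prior over a 3x3 grid; the agent at (0, 0) senses a signal.
cells = [(r, c) for r in range(3) for c in range(3)]
prior = {cell: 1 / len(cells) for cell in cells}
posterior = bayes_update(prior, signal=True, agent=(0, 0))
print(round(posterior[(0, 1)], 3), round(posterior[(2, 2)], 3))  # 0.273 0.03
```

A single signal makes each cell near the agent nine times as likely as a distant cell to hide the trapdoor. Further observations simply chain more updates, each using the previous posterior as the new prior, and a search-based agent can then treat cells with a high posterior as costly to step on.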

For Hillegass, seeing students’ strategies is part of the excitement.

“There’s no one more creative than a Georgia Tech student in a competitive situation,” Hillegass said. “When I see an agent doing something clever, I often download the code to look it over. It’s a constant reminder of what an honor it is to teach these amazing students.”

Advice for Future Students

Mo encourages future participants to experiment widely, but to begin with the fundamentals.

“It's important to have a strong fundamental understanding of the game code. But it’s possible that a bot behaves poorly due to a bug in the code, and not due to a problem with the idea. So, just go out there and experiment with different options.”

Outside the classroom, students interested in AI competitions can also get involved with ByteFight by competing in its annual spring competition or joining the organization’s development team to help design future games and maintain the platform.