CoRL 2023: Large Language Models Help Humans to Communicate with Robots

Roboticists have a new way to communicate instructions to robots — large language models (LLMs).

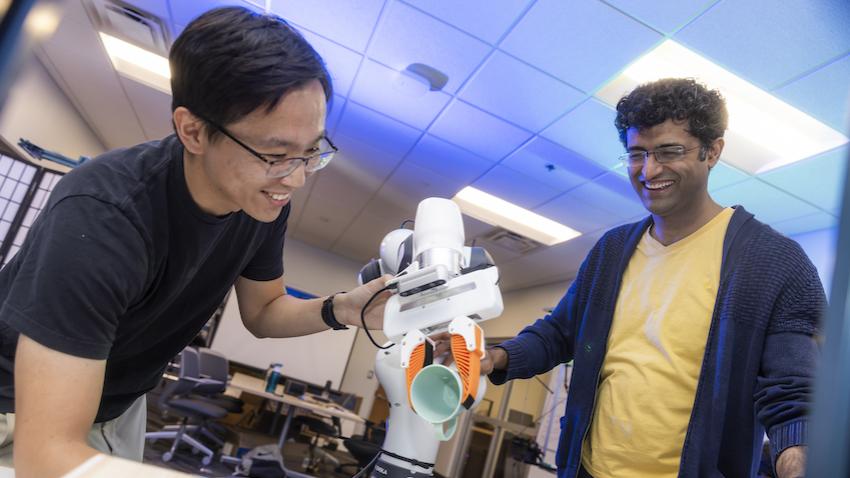

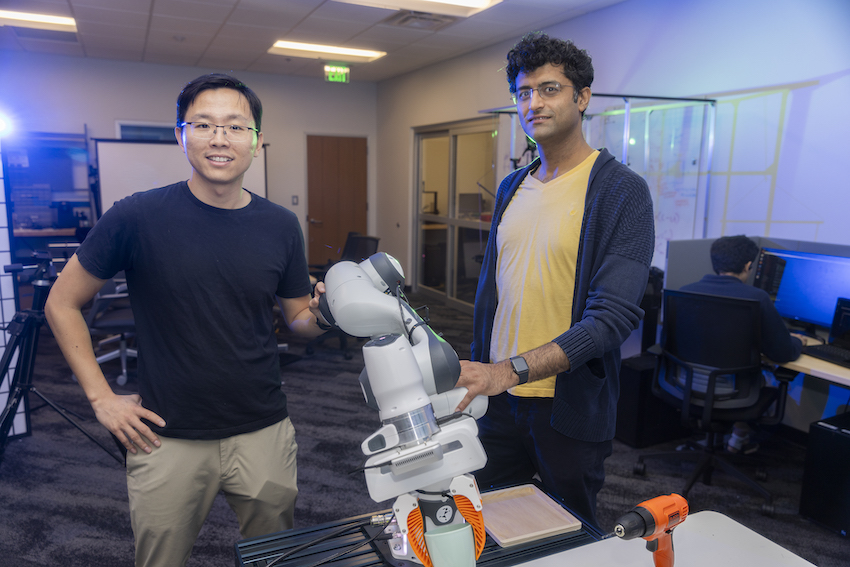

Georgia Tech robotics researchers Animesh Garg and Danfei Xu think LLMs like ChatGPT make the best human-to-robot translators when it comes to training robots.

Garg said LLMs have a healthy understanding of human language and can communicate instantly with robots by translating instructions into code. This shortens the training process by weeks and, in some cases, months.

“It’s something that has taken us by surprise,” Garg said. “These models can be applied to many tasks with little or no user input and without retraining. They don’t need specific data for the task that we need them to do. Traditional training methods take a substantial amount of time and data for training.”

For a robot to make coffee, it must understand the steps involved. It must also be familiar with different coffee machines and coffee brands, information that is readily available on the internet.

“LLMs are going to take the job of high-level reasoning,” Xu said. “They will be part of the decision making about what needs to be done in a setting with all the knowledge a robot has about the world. ChatGPT is trained on the internet, so it knows a lot about what the world should look like and how a normal human would perform a task. It will most likely figure out a reasonable action to take to achieve a goal.”

The researchers emphasized that this method is a generalized approach and does not account for individual user preferences. Because LLMs tend to be biased, they will do the job, but on their own terms.

“Bias can play a role in this setting,” Xu said. “It may be more biased toward certain procedures like cooking recipes certain ways. It may be biased toward a certain way to do things instead of the preference of the human working with the robot.”

Xu said another example would be a robot tasked with tidying a living room. It may need to understand the specific room it is in, but an LLM will only provide a general understanding of a typical living room.

Garg and Xu, assistant professors in the School of Interactive Computing, are longtime collaborators who have worked on this idea since their time together at Stanford and, more recently, at Nvidia.

Garg and Xu’s research created buzz last year when they published their main body of work on the topic. They co-authored ProgPrompt: Generating Situated Robot Task Plans using Large Language Models with Nvidia Senior Director of Robotics Research Dieter Fox, among others.

Garg said that paper has been cited more than a hundred times since publication. He and Xu both worked at Nvidia when LLMs were in early development, and even then they saw the impact the models would have on robotics.

The researchers had tried to build a working method of communication between humans and robots using deep learning models. However, Garg said the scope of that work was limited by the models’ lack of prior knowledge about the world. Adding LLMs to the equation supercharged the process.

“Many people have tried many different ideas on how to use this in robotics,” Garg said. “Robotics is converging to the idea that LLMs outputting code is the right thing to do. We have been pushing this idea for many years before LLMs became widely known. Program synthesis with LLMs is a very generalizable and scalable approach to robotics.”
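To make the idea of “LLMs outputting code” a little more concrete, here is a minimal sketch loosely modeled on the general shape of a ProgPrompt-style prompt: the robot’s available actions are listed as Python imports, the objects in the scene as a list, and the new task as a function header that the LLM is asked to complete. Everything here is a hypothetical illustration, not the authors’ code: the action names (`walk`, `grab`, `putin`), the object list, and the helper functions `build_prompt` and `parse_plan` are assumptions, and no LLM is actually called (the “completion” is hard-coded). The parser shows one reason code is a convenient output format: a generated plan can be checked mechanically against what the robot can actually do before anything runs.

```python
import ast


def build_prompt(actions, objects, examples, task):
    """Assemble a hypothetical ProgPrompt-style prompt: action imports, visible
    objects, example plans written as Python functions, and a new task header."""
    parts = [f"from actions import {', '.join(actions)}", f"objects = {objects!r}"]
    parts.extend(example.strip() for example in examples)
    # The LLM would be asked to continue the program after this header.
    func_name = task.lower().replace(" ", "_")
    parts.append(f"def {func_name}():")
    return "\n\n".join(parts)


def parse_plan(code, actions, objects):
    """Extract (action, args) steps from a generated plan function, rejecting
    calls to unknown actions or references to objects not in the scene."""
    func = ast.parse(code).body[0]
    if not isinstance(func, ast.FunctionDef):
        raise ValueError("expected a single function definition")
    steps = []
    for stmt in func.body:
        # Only bare call statements such as grab('salmon') count as steps.
        if not (isinstance(stmt, ast.Expr) and isinstance(stmt.value, ast.Call)):
            continue
        call = stmt.value
        if not isinstance(call.func, ast.Name) or call.func.id not in actions:
            raise ValueError(f"unknown action: {ast.unparse(call.func)}")
        args = []
        for arg in call.args:
            if not (isinstance(arg, ast.Constant) and arg.value in objects):
                raise ValueError(f"unknown object: {ast.unparse(arg)}")
            args.append(arg.value)
        steps.append((call.func.id, tuple(args)))
    return steps


actions = ["walk", "grab", "putin"]
objects = ["kitchen", "salmon", "microwave"]
prompt = build_prompt(actions, objects, [], "put salmon in microwave")

# A hard-coded stand-in for what an LLM might return for that header.
completion = """def put_salmon_in_microwave():
    walk('kitchen')
    grab('salmon')
    putin('salmon', 'microwave')
"""
print(parse_plan(completion, actions, objects))
# [('walk', ('kitchen',)), ('grab', ('salmon',)), ('putin', ('salmon', 'microwave'))]
```

Expressing the plan as a program, rather than free text, means an invalid step such as `fly('salmon')` raises an error at parse time instead of reaching the robot.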

Garg and Xu are presenting multiple papers next week at the 2023 Conference on Robot Learning (CoRL) at the Starling Hotel in Atlanta. The conference spotlights research from emerging junior faculty from academic institutions around the world.

For more information on their papers and presentation times, visit the CoRL website.