CS Student Named Google Ph.D. Fellow

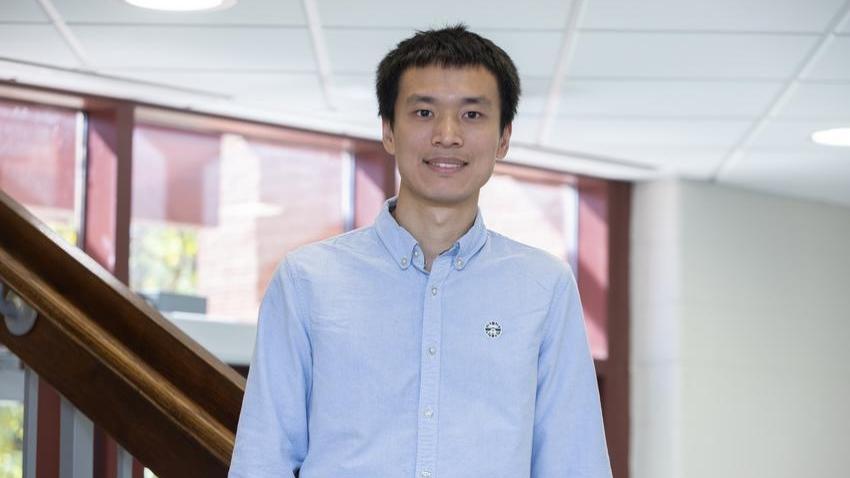

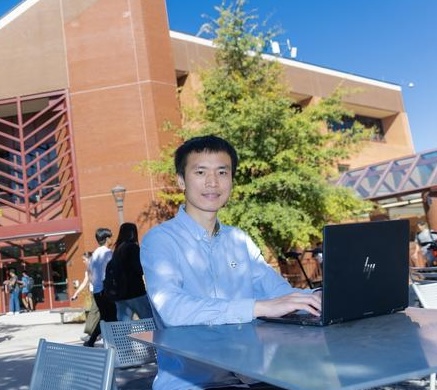

A fourth-year Ph.D. student in computer science is being recognized for work to improve the safety of artificial intelligence (AI).

The Google PhD Fellowship Program has named Tiansheng Huang as a 2025 recipient.

The fellowship recognizes outstanding graduate students conducting exceptional, innovative research in computer science and related fields, with a particular focus on candidates who seek to shape the future of technology. The program provides direct financial support and connects each fellow with a Google research mentor.

Huang researches AI safety, specifically how to develop robust safety solutions that align large language models (LLMs) with human values.

Existing safety alignment solutions for LLMs are often fragile and can be easily compromised by fine-tuning attacks. Although there has been some progress on this problem, stronger attacks can still be destructive. Huang’s research over the past few years has focused on developing more effective methods to protect models from malicious fine-tuning attacks.

“My goal is to continue designing safety alignment mechanisms that are robust to harmful fine-tuning until the protection mechanism is at least free from being destroyed by simple fine-tuning attacks,” he said.

Huang said he was drawn to AI safety research because of its importance to society and the public interest. He also found the field's problems interesting to work on.

Huang said he hopes the fellowship will allow him to pursue research whose impact may not be immediate, and that the fellowship's visibility can help raise public awareness of the importance of AI safety.

“Without a clear understanding and a robust mitigation of some harmful behaviors of the LLMs, large-scale application of LLMs into the real world will incur an unpredictable risk,” Huang said. “A robust safety alignment must be deployed to the model to avoid it from being misused by malicious users, or even by the model itself, given its increasing intelligence.”

Huang’s advisor, School of Computer Science Professor Ling Liu, said that Huang has the unique ability to tackle problems from both theoretical and practical perspectives.

“This fellowship is not only a recognition of Tiansheng’s research achievements to date but also an encouragement for him to continue to innovate in AI safety area,” Liu said.

In addition to this recognition, Huang has previously been named an outstanding reviewer at The Twelfth International Conference on Learning Representations (ICLR 2024) and a top reviewer at the Thirty-Seventh Annual Conference on Neural Information Processing Systems (NeurIPS 2023).