Research Explores Fair Grading in Large CS Classes

A team of School of Computing Instruction (SCI) lecturers is using rubrics to promote fair grading in large computer science (CS) classes.

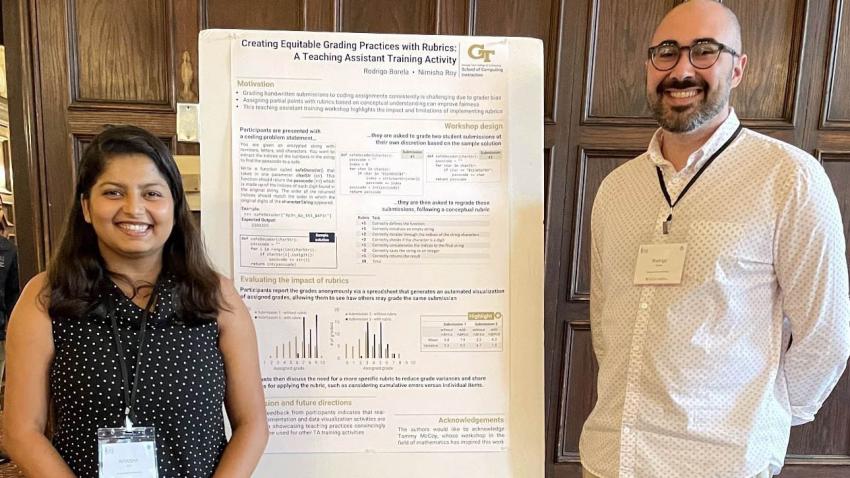

Grading coding assignments in these courses presents a unique set of challenges. Because evaluating code involves subjective judgment, grading can be inconsistent and biased across graders. SCI Lecturers Nimisha Roy and Rodrigo Borela Valente researched these challenges and studied the effects of introducing fair-grading guidelines through a teaching assistant (TA) training workshop.

Earlier this month, they presented their poster, "Creating Equitable Grading Practices with Rubrics," at the ACM International Computing Education Research Conference (ICER).

(Photos by Nimisha Roy)

Challenges of Grading Code in CS

Evaluating coding assignments is not straightforward, especially in large CS classes. The researchers say grading can vary because of differences in interpretation and personal biases. The problem becomes more pronounced when several TAs grade different submissions of the same problem, since each may interpret the assignment differently. Addressing these challenges is crucial to ensuring an equitable learning environment.

The Power of Effective Rubrics in Grading

Rubrics offer a structured approach to grading that can help mitigate biases and inconsistencies. By outlining specific criteria for assessing student work, rubrics give graders a common framework to follow, which promotes objectivity and helps ensure that all students are evaluated fairly.

The team says that for rubrics to work, they must be simple, specific, and easy for instructors, graders, and students to understand. For coding assignments, they note, the final output may not always reflect a student's grasp of the underlying concepts. It is therefore important to award partial points based on conceptual understanding rather than focusing solely on output.
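To make the idea of partial credit concrete, here is a minimal Python sketch of a points-based rubric. The rubric items, their point values, and the names `RubricItem`, `score`, and `max_score` are hypothetical illustrations, not the rubric used in the workshop. The sketch only shows how most of a score can track solution components a grader can check, so a submission with the wrong final output can still earn credit for the concepts it demonstrates.

```python
from dataclasses import dataclass


# Hypothetical rubric item: one solution component and the points it is worth.
# These items are illustrative, not the rubric used in the SCI workshop.
@dataclass(frozen=True)
class RubricItem:
    description: str
    points: int


# Hypothetical rubric for a generic coding problem: most points reward
# conceptual steps, and only a small share depends on the final output.
RUBRIC = [
    RubricItem("Reads and validates the input correctly", 2),
    RubricItem("Chooses an appropriate loop or recursion structure", 3),
    RubricItem("Handles base and edge cases", 2),
    RubricItem("Updates the running result correctly", 2),
    RubricItem("Produces the expected final output", 1),
]


def score(rubric: list[RubricItem], met: set[int]) -> int:
    """Sum the points of every rubric item (by index) the grader marked as met."""
    return sum(item.points for i, item in enumerate(rubric) if i in met)


def max_score(rubric: list[RubricItem]) -> int:
    """Total points available under the rubric."""
    return sum(item.points for item in rubric)


if __name__ == "__main__":
    # A submission with sound structure but a wrong final answer still earns
    # partial credit for the components it gets right.
    met = {0, 1, 2}
    print(f"{score(RUBRIC, met)}/{max_score(RUBRIC)}")  # prints "7/10"
```

Because every grader checks the same listed components, two TAs who agree on which items a submission meets will arrive at the same score, which is the consistency property the researchers describe.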

TA Training Workshop

In this study, the team conducted a hands-on training workshop for 50 TAs in a core undergraduate CS course to explore how effectively rubrics support the grading of coding assignments and to assess their impact on grading bias and consistency.

Participants were first given two sample incorrect solutions to a problem and asked to grade them at their own discretion. In the second round, they re-graded the solutions using a rubric that assigned points to different solution components.

“In our workshop, teaching assistants graded assignments with and without these rubrics. The findings were remarkable; using the rubrics significantly reduced grading inconsistencies,” Roy said. “Additionally, we incorporated data visualizations to highlight the differences in grading, making our approach both unique and effective.”

Quantitative Analysis

The workshop used data visualization to highlight differences in grading outcomes between the two rounds. Results from each round were reported anonymously, and a real-time graph displayed the grade distribution. This let TAs see the impact of rubrics on grading consistency and prompted discussion of biases and best practices for using rubrics.
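As a rough illustration of how a round-by-round comparison like this can be quantified, the Python sketch below summarizes the spread of scores that different graders gave the same submission and prints a simple text histogram per round. The scores are invented for illustration and are not the study's data, and using standard deviation and range as consistency measures is an assumption rather than the researchers' reported method. The names `summarize` and `text_histogram` are hypothetical.

```python
from collections import Counter
from statistics import mean, pstdev


def summarize(label: str, scores: list[int]) -> dict:
    """Return simple consistency measures for one grading round."""
    return {
        "round": label,
        "mean": mean(scores),
        "stdev": pstdev(scores),  # population standard deviation across graders
        "range": max(scores) - min(scores),  # gap between highest and lowest grade
    }


def text_histogram(scores: list[int]) -> str:
    """Render one row per distinct score, with one '#' per grader who gave it."""
    counts = Counter(scores)
    return "\n".join(f"{s:>3} | {'#' * counts[s]}" for s in sorted(counts))


if __name__ == "__main__":
    # Hypothetical scores (out of 10) that eight TAs gave the same incorrect
    # submission in each round; NOT data from the study.
    without_rubric = [2, 4, 5, 5, 6, 7, 8, 9]
    with_rubric = [6, 6, 7, 7, 7, 7, 8, 8]
    for label, scores in [("without rubric", without_rubric), ("with rubric", with_rubric)]:
        print(summarize(label, scores))
        print(text_histogram(scores))
```

A narrower histogram and smaller standard deviation in the second round would indicate more consistent grading; a live workshop would more likely plot the same counts as a bar chart.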

Roy and Borela Valente say their approach can extend beyond CS. They see the workshop's design as an opportunity to refine teaching strategies and promote ethical considerations across education more broadly.