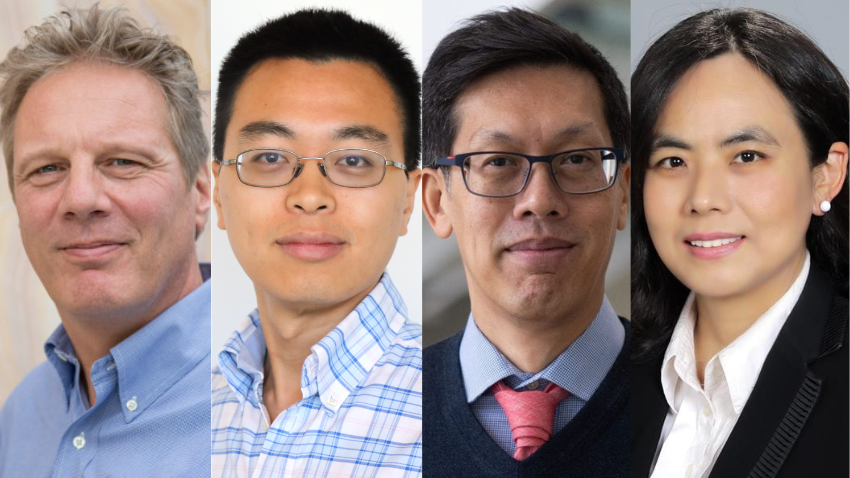

Two IC Faculty Receive NSF CAREER for Robotics and AR/VR Initiatives

Practice may not make perfect for robots, but new machine learning models from Georgia Tech are helping them improve their skills so they can more effectively assist humans in the real world.

Danfei Xu, an assistant professor in Georgia Tech’s School of Interactive Computing, is introducing new models that provide robots with “on-the-job” training.

The National Science Foundation (NSF) has awarded Xu a CAREER award, which supports early-career faculty. The award will enable Xu to expand his research and refine his models, which could speed up robot deployment and relieve manufacturers of the burden of achieving perfection.

“The main problem we’re trying to tackle is how to allow robots to learn on the job,” Xu said. “How should it self-improve based on the performance or the new requirements or new user preferences in each home or working environment? You cannot expect a robot manufacturer to program all of that.

“The challenging thing about robotics is that the robot must get feedback from the physical environment. It must try to solve a problem to understand the limits of its abilities so it can decide how to improve its own performance.”

As with humans, Xu views practice as the most effective way for a robot to improve a skill. His models train the robot to identify the point in a task at which it failed.

“It identifies that skill and sets up an environment where it can practice,” he said. “If it needs to improve opening a drawer, it will navigate itself to the drawer and practice opening it.”

The models allow the robot to split tasks into smaller parts and evaluate its own skill level using reward functions. Cooking dinner, for example, can be divided into steps like turning on the stove and opening the fridge, which are necessary to achieve the overall goal.

“Planning is a complex problem because you must predict what’s going to happen in the physical world,” Xu said. “We use machine learning techniques that our group has developed over the past two years, using generative models to generate possible futures. They’re very good at modeling long-horizon phenomena.

“The robot knows when it’s failed because there’s a value that tells it how well it performed the task and whether it received its reward. While we don’t know how to tell the robot why it failed, we have ways for it to improve its skills based on that measurement.”
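The article does not describe how Xu's models are implemented. As a rough illustration of the idea above (split a task into sub-skills, score each with a reward, then practice the weakest one), here is a minimal Python sketch. It assumes each attempt yields a reward of 1.0 for success and 0.0 for failure. The `Skill` class, the `weakest_skill` and `practice` functions, the 0.8 threshold, and the reward histories are all hypothetical. This is not Xu's method, which relies on learned models rather than simple success counts.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Skill:
    """A hypothetical sub-skill of a larger task, e.g. 'open fridge'."""
    name: str
    # Assumed reward history: 1.0 = success, 0.0 = failure.
    rewards: List[float] = field(default_factory=list)

    def success_rate(self) -> float:
        # A skill with no attempts yet scores 0.0, so it gets practiced first.
        return sum(self.rewards) / len(self.rewards) if self.rewards else 0.0


def weakest_skill(skills: List[Skill], threshold: float = 0.8) -> Optional[Skill]:
    """Return the lowest-scoring skill below the threshold, or None if all pass."""
    candidates = [s for s in skills if s.success_rate() < threshold]
    if not candidates:
        return None
    return min(candidates, key=lambda s: s.success_rate())


def practice(skill: Skill, attempt: Callable[[str], float], trials: int = 5) -> None:
    """Repeatedly attempt one skill and record the reward from each trial.

    `attempt` is a hypothetical stand-in for running the skill on a robot.
    """
    for _ in range(trials):
        skill.rewards.append(attempt(skill.name))


# "Cooking dinner" split into sub-skills with made-up reward histories.
dinner = [
    Skill("turn on stove", [1.0, 1.0, 1.0, 1.0]),
    Skill("open fridge", [1.0, 0.0, 0.0, 1.0]),
    Skill("open drawer", [1.0, 1.0, 0.0, 1.0]),
]

target = weakest_skill(dinner)
print(target.name)  # open fridge
```

Selecting the skill by its measured reward mirrors the point in the quote: the robot does not need to know *why* it failed, only which sub-skill scores lowest, so it knows where to spend practice time.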

One of the biggest barriers keeping many robots out of public use is the pressure on manufacturers to make them as close to perfect as possible at deployment. Xu said it is more practical to accept that robots will have learning gaps and to fill those gaps with more efficient real-world learning models.

“We work under the pressure of getting everything correct before deployment,” he said. “We need to meet the basic safety requirements, but in terms of competence, it is difficult to get that perfect at deployment. This takes some of the pressure off because it will be able to self-adapt.”

Virtual Workspace for Data Workers

Yalong Yang, another assistant professor in the School of IC, also received an NSF CAREER award for a proposal to design augmented and virtual reality (AR/VR) workspaces for data workers.

“In 10 years, I envision everyone will use AR/VR in their office, and it will replace their laptop or their monitor,” Yang said.

Yang said he is also working with Google on the project, using Google Gemini to bring conventional applications into immersive spaces. Data tools, he said, are the most complicated systems to redesign for immersive environments.

The immersive workspace and interface will also enable teams of data workers to collaborate and share their data in real time.

“I want to support the end-to-end process,” Yang said. “We have visualization tools for data, but it’s not enough. Data science is a pipeline — from collecting data to processing, visualizing, modeling and then communicating. If you only support one, people will need to switch to other platforms for the other steps.”

Yang also noted that prior research has shown that VR can enhance cognitive abilities, such as memory and attention, and can support multitasking. The results of his project could help workers become more efficient without feeling strained.

“We all have a cognitive limit in our working memory. Using AR/VR can increase those limits and process more information. We can expand people’s spatial ability to help them build a better mental model of the data presented to them.”

Yang was also recently named a 2025 Google Research Scholar for his work to build a new artificial intelligence (AI) tool that converts mobile apps into 3D immersive environments.