Guess Who: New Framework Advances Probabilistic Capabilities in LLMs

Anonymous social media users can now use large-language models (LLMs) to know the likelihood of someone guessing their identity based on the information they disclose in their posts.

That’s because a new framework is improving the probabilistic reasoning of LLMS like ChatGPT and Gemini.

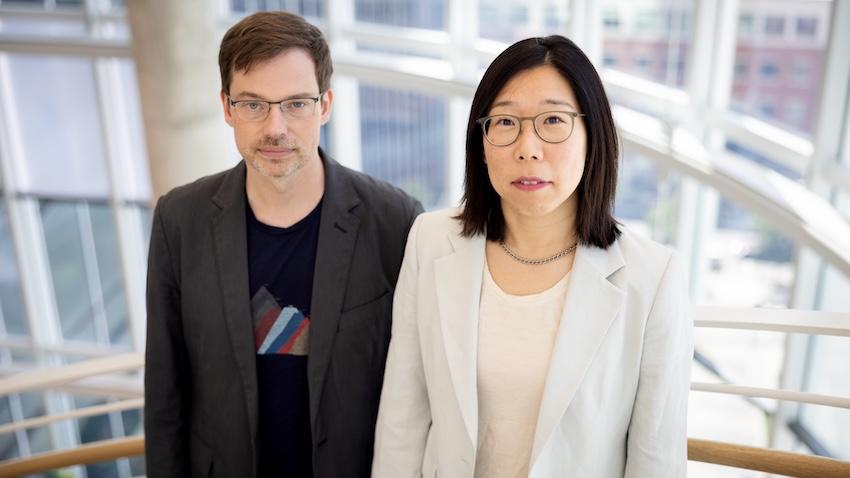

School of Interactive Computing associate professors Wei Xu and Alan Ritter have shown in a recent paper that Bayesian reasoning enables accurate probability population statistics from LLMs.

Xu and Ritter will present their findings from the paper, titled Probablistic Reasoning with LLMs for Privacy Risk Estimation, next week at the 2025 Conference on Neural Information Processing Systems (NeurIPS) in San Diego.

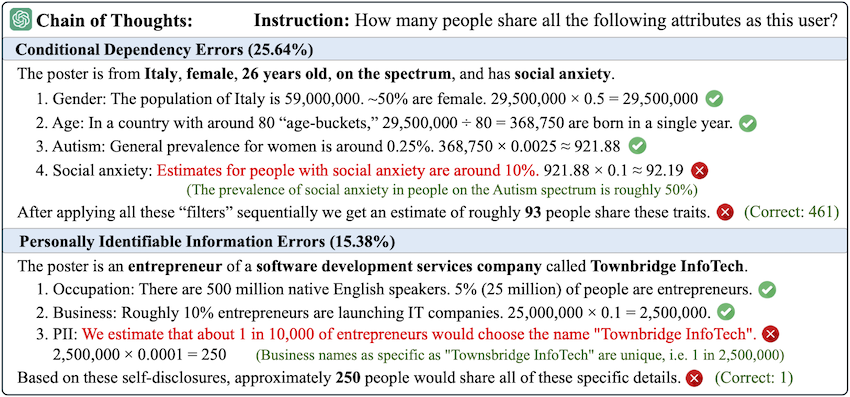

Xu’s and Ritter’s experiments challenged LLMs to estimate the privacy exposure risk of information shared in anonymous social media posts. The results showed that Bayesian reasoning outperforms chain-of-thought (CoT) reasoning in solving probability-based math problems.

Since the introduction of ChatGPT and Gemini in 2022, CoT reasoning has been the established benchmark for LLMs to solve linear math equations.

Through CoT, a user elicits accurate answers from LLMs by providing examples of how to solve an equation in their prompt. The model then follows the example method of solving the equation to answer similar problems.

However, these models struggle to solve probability equations through CoT. The model’s accuracy decreases as more variables are introduced.

“If we’re just talking about two variables or attributes, a model can handle that through CoT,” Xu said. “But more combinations become more difficult. It doesn’t always understand if one thing is dependent on another or if they’re independent.”

Thinking Like Humans

Computer programs can provide instant probability estimates when they have sufficient data. Xu said the problem is that language models are trained internet text, and there aren’t many examples of probabilistic reasoning.

“They need the facts and the knowledge, and they also need to learn how to generalize it,” Xu said. “If there were thousands of examples of how to do this online, they would have a better chance of being accurate. But people don’t normally describe probabilistic methods in texts.”

Ritter said part of the reason is that most people struggle cognitively with probability, and that reflects in LLMs.

“There’s work in psychology that has shown people are not good at statistical thinking,” Ritter said. “A lot of the pretraining data for language models is based on our language. It’s mimicking us, so they’re not trained to manipulate probabilities this way.”

Bayesian reasoning, named after English statistician Thomas Bayes, accounts for variables and updates probability as more information becomes available.

“You have a set of variables or attributes, and you want to estimate how many people in the population fit this specific set of attributes,” Ritter explained about Bayesian reasoning. “I have a dog, I work at Georgia Tech, I live in Atlanta — any combination of these attributes.

“You can combine those together to get an estimate of how many people share those things, and some will overlap. The probability of working at Georgia Tech and the probability of living in Atlanta are dependent on each other, so we can’t just multiply them together. Bayesian reasoning accounts for conditional independence when deriving these estimates.”

Assessing the Risks

For their experiments, Xu and Ritter developed BRANCH — a Bayesian framework that measures the privacy value of a text against the size of the population matching the given information.

“Let’s say someone wants to vent anonymously about the company they work for on Reddit,” Ritters aid. “They mention the company’s name in the post and where they live, and maybe whether they’re married and have kids. We can determine the likelihood that someone will guess their identity.

“There’s no database we can go to and plug these in and figure out how many people share all these attributes. But we can find pretty good statistics about how many people work at the company, how many people own dogs out of the population, how many people live in Atlanta, and so on.”

BRANCH produced accurate estimates in 73% of the queries — a 13% performance increase over CoT reasoning.

Xu and Ritter emphasized that CoT reasoning remains the benchmark for LLMs solving linear equations, and the Bayesian framework won’t replace CoT for those functions.

However, their findings could unlock new risk assessment capabilities within LLMs, potentially making them useful tools for

- investors looking to make healthy financial decisions.

- companies seeking specific demographic information about their customer base before they can create targeted ads.

- health officials understanding the segments of the population most likely to be affected by a medical condition.

- insurers needing to assess the risk of residents who are vulnerable to flooding or natural disasters.

“When people face decisions under uncertainty, probabilistic reasoning is the right tool,” Ritter said. “As long as users are entering accurate information, the Bayesian framework provides reliable assessments.”