Ph.D. Student’s Framework Used to Bolster Nvidia’s Cosmos Predict-2 Model

A new deep learning architectural framework could boost the development and deployment efficiency of autonomous vehicles and humanoid robots. The framework will lower training costs and reduce the amount of real-world data needed for training.

World foundation models (WFMs) enable physical AI systems to learn and operate within synthetic worlds created by generative artificial intelligence (genAI). For example, these models use predictive capabilities to generate up to 30 seconds of video that accurately reflects the real world.

The new framework, developed by a Georgia Tech researcher, enhances the processing speed of the neural networks that simulate these real-world environments from text, images, or video inputs.

The neural networks that make up the architectures of large language models like ChatGPT and visual models like Sora process contextual information using the “attention mechanism.”

Attention refers to a model’s ability to focus on the most relevant parts of its input.
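As a rough illustration of that idea, the sketch below implements standard scaled dot-product self-attention in plain Python. It is a teaching example, not code from NATTEN or Cosmos: the function names and the three toy two-dimensional tokens are assumptions made for illustration. Each token scores its similarity to every other token, and a softmax turns those scores into weights that sum to 1.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Score this query against every key, scaled by sqrt(dim).
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # The output is the weighted average of the value vectors.
        out = [sum(w * v[d] for w, v in zip(weights, values))
               for d in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical toy tokens: the first two are similar, the third is different.
tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
```

In this toy input, the first token assigns more weight to the similar second token than to the dissimilar third one, which is the "focus" the attention mechanism provides. Note that every token is compared with every other token, so the work grows with the square of the number of tokens.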

The Neighborhood Attention Extension (NATTEN) allows models that require GPUs or high-performance computing systems to process information and generate outputs more efficiently.

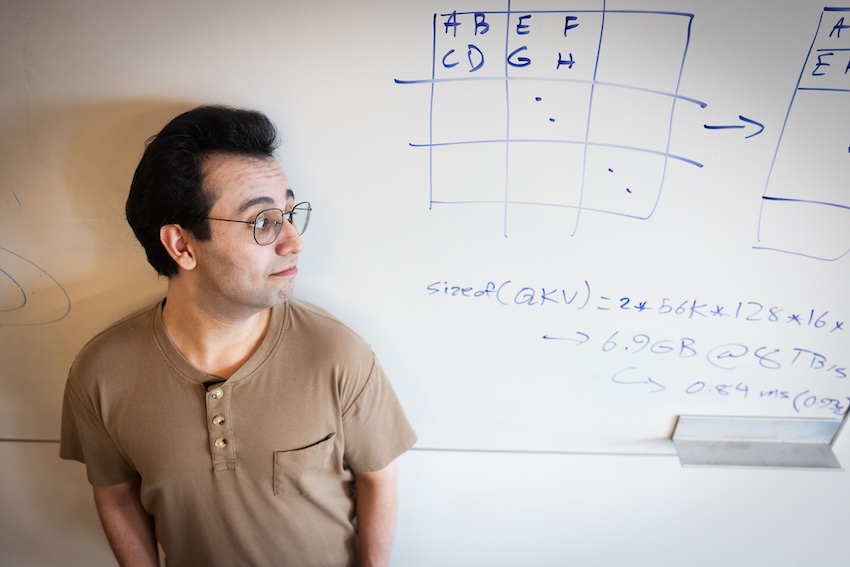

Processing speeds can increase by up to 2.6 times, said Ali Hassani, a Ph.D. student in the School of Interactive Computing and the creator of NATTEN. Hassani is advised by Associate Professor Humphrey Shi.

Hassani is also a research scientist at Nvidia, where he introduced NATTEN to Cosmos — a family of WFMs the company uses to train robots, autonomous vehicles, and other physical AI applications.

“You can map just about anything from a prompt or an image or any combination of frames from an existing video to predict future videos,” Hassani said. “Instead of generating words with an LLM, you’re generating a world.

“Unlike LLMs that generate a single token at a time, these models are compute-heavy. They generate many images — often hundreds of frames at a time — so the models put a lot of work on the GPU. NATTEN lets us decrease some of that work and proportionately accelerate the model.”

In machine learning, sparsity describes datasets and models in which a high proportion of values are zero or default values. Because those entries do not need to be stored or computed, a high level of sparsity reduces storage requirements and speeds up computations.

Hassani used NATTEN to introduce sparsity into the Cosmos Predict-2 models, increasing sparsity from 50% to 98% across the neural network layers.
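To make those percentages concrete, here is a minimal sketch of how restricting attention to a local window produces sparsity. It assumes a one-dimensional sequence of 100 tokens and window sizes picked purely so the numbers are easy to read; the helper names are hypothetical, and this is not the actual Cosmos configuration or the NATTEN API. A 1 in the mask means a pair of tokens may interact, and a 0 means that pair is skipped.

```python
def neighborhood_mask(num_tokens, window):
    """Return a 0/1 mask; row i marks the tokens that token i may attend to."""
    mask = []
    for i in range(num_tokens):
        # Center the window on token i, then shift it to stay inside the sequence.
        start = min(max(i - window // 2, 0), num_tokens - window)
        mask.append([1 if start <= j < start + window else 0
                     for j in range(num_tokens)])
    return mask

def sparsity(matrix):
    """Fraction of entries in the matrix that are zero."""
    entries = [x for row in matrix for x in row]
    return entries.count(0) / len(entries)

# Illustrative window sizes for a 100-token sequence (assumed values).
dense_sparsity = sparsity(neighborhood_mask(100, 100))  # every pair interacts
half_sparsity = sparsity(neighborhood_mask(100, 50))    # half the pairs skipped
local_sparsity = sparsity(neighborhood_mask(100, 2))    # nearly all pairs skipped
```

With these assumed sizes, the three masks come out 0%, 50%, and 98% sparse. The smaller the window, the fewer token pairs the model has to process.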

“You can get pretty good acceleration just by introducing sparsity,” he said. “We can rapidly accelerate these video and world models that, comparatively to language models, are very slow. When you type something into ChatGPT, you get the first token instantly. If you want to generate a video or image, you have to look at the screen for a few seconds or even minutes.

“You can run these models with various resolutions, aspect ratios, and frame rates. The minimum speedup we deliver is 1.7x, and the maximum has been 2.6x.”

When WFMs generate predictive images and videos, they process every pixel from each input image. Then, they produce tens of thousands of probability outcomes for how each pixel might interact with others.

Hassani said NATTEN simplifies this process.

“The model can look at the probability of them interacting regardless of where those tokens are,” he said. “Those tokens could be 100 frames apart, and they’ll still interact. Neighborhood attention allows us to localize that. You don’t need to go back through all the frames, and it’s up to the person designing the model architecture to determine how much you want to localize it.”
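The localization Hassani describes can be sketched in a few lines of plain Python. This is a simplified one-dimensional illustration under stated assumptions, not NATTEN's implementation or API: the function name, the `window` parameter, and the eight toy tokens are all hypothetical, and real video models work over frames, height, and width rather than a single axis. Each token attends only to a fixed number of nearby tokens instead of the whole sequence.

```python
import math

def neighborhood_attention(queries, keys, values, window):
    """Each query attends to `window` nearby keys (assumes window <= len(keys))."""
    n, dim = len(keys), len(keys[0])
    outputs, neighborhoods = [], []
    for i, q in enumerate(queries):
        # Center the window on token i, shifting it at the sequence edges.
        start = min(max(i - window // 2, 0), n - window)
        idx = list(range(start, start + window))
        # Score the query only against keys inside its neighborhood.
        scores = [sum(a * b for a, b in zip(q, keys[j])) / math.sqrt(dim)
                  for j in idx]
        peak = max(scores)  # subtract the max for numerical stability
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Weighted average of the neighboring value vectors.
        out = [sum(w * values[j][d] for w, j in zip(weights, idx))
               for d in range(len(values[0]))]
        outputs.append(out)
        neighborhoods.append(idx)
    return outputs, neighborhoods

# Eight toy one-dimensional tokens standing in for a short sequence.
tokens = [[float(t)] for t in range(8)]
outputs, neighborhoods = neighborhood_attention(tokens, tokens, tokens, window=3)

full_pairs = len(tokens) ** 2                           # pairs in full attention
local_pairs = sum(len(nb) for nb in neighborhoods)      # pairs actually scored
```

Here full attention would score 64 token pairs, while the window of 3 scores only 24. Setting `window` equal to the sequence length recovers full attention, which mirrors the point that the model designer chooses how much to localize.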

Nvidia used NATTEN to fine-tune its prediction model, Cosmos Predict, and the company introduced the second version of the model, Cosmos Predict-2, in June.

“Someone building neural network architecture for Nvidia or another company can build this in, and it will run more efficiently,” Hassani said.

“It’s designed to benefit any neural network with compute-heavy attention.”